2025 GTC Review: The Silicon Photonics Era Has Arrived – Get Ready!

Original Articles by SemiVision Research

Three Stages of AI Evolution

NVIDIA outlined three key stages of AI: Generative AI, Agentic AI, and Physical AI. To achieve each stage, three fundamental aspects must be addressed:

Solving data challenges

Solving training challenges

Scaling models effectively

The Development of AI:

AI has evolved through several phases. Perceptive AI covers tasks such as computer vision and speech recognition. Generative AI, the main focus of the past five years, learns to convert between modalities, such as text to image. Agentic AI and Physical AI are expected to thrive next.

Three Fundamental Elements of AI Development:

1. How to solve data problems.

2. How to train models without human intervention.

3. How to achieve scalability (the more resources, the smarter the AI).

With the rise of Agentic AI and reasoning, NVIDIA estimates that the computation AI requires is at least 100 times greater than what was expected a year ago. Modern AI can reason, decompose problems step by step, try different solutions, and verify answers.

The core technology of AI remains token prediction: the model generates the next token, and the reasoning process itself unfolds as a sequence of generated tokens.
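As a rough illustration of what "generating the next token" means, the sketch below runs a greedy decoding loop over a tiny hand-written probability table. The table, its vocabulary, and the `generate` function are hypothetical stand-ins for a real model, which would compute these probabilities with a neural network.

```python
# Toy illustration only: a hand-written bigram table stands in for a real model.
# The vocabulary and the probabilities are hypothetical.
BIGRAM_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"answer": 0.7, "question": 0.3},
    "a": {"question": 0.8, "answer": 0.2},
    "answer": {"is": 0.9, "<end>": 0.1},
    "question": {"is": 0.6, "<end>": 0.4},
    "is": {"42": 0.6, "<end>": 0.4},
    "42": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        candidates = BIGRAM_PROBS[tokens[-1]]
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker


print(generate())
```

Each pass through the loop conditions only on what has been generated so far, which is why longer reasoning chains translate directly into more tokens and more compute.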

A key breakthrough in training AI reasoning capabilities is reinforcement learning, where AI learns by solving problems with known answers (e.g., mathematics, geometry, logic, science, puzzles) and receiving rewards. Training currently requires trillions of tokens of data.
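The reward signal described above can be sketched in a few lines. This is a minimal example under stated assumptions: the problems, the model answers, and the `reward` function are all made up for illustration, and only the scoring step is shown, not the training loop that would use the scores to update a model.

```python
# Hypothetical sketch of a verifiable reward: 1.0 for a correct answer, else 0.0.
def reward(predicted: str, known_answer: str) -> float:
    """Score one attempt against a problem whose answer is already known."""
    return 1.0 if predicted.strip() == known_answer.strip() else 0.0


# Made-up problems with known answers, and made-up model outputs.
problems = [("2 + 2", "4"), ("3 * 5", "15"), ("10 - 7", "3")]
model_answers = ["4", "16", "3"]

rewards = [reward(answer, known) for answer, (_, known) in zip(model_answers, problems)]
mean_reward = sum(rewards) / len(rewards)

print(rewards, round(mean_reward, 2))
```

Because the answer can be checked automatically, no human needs to label each attempt, which is what lets this kind of training scale.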

Agentic AI and Physical AI are opening new markets:

Agentic AI: Capable of perception, reasoning, and autonomous decision-making, it can perform higher-level AI tasks such as web crawling, data analysis, and decision-making, driving the demand for cloud AI computing.

Physical AI: Focuses on the application of AI in the physical world, such as in robotics and autonomous driving, which will bring a new wave of demand for AI hardware.

End-user Applications: Covers industries such as industrial automation, healthcare, financial trading, smart transportation, and more.

Through reinforcement learning, AI can generate massive amounts of tokens and synthetic data.

The S-Curve concept in AI growth refers to the stages of adoption, where AI technology starts slow, accelerates rapidly, and eventually reaches maturity as the market saturates. For AI, this means we may witness significant growth in the coming years as more industries adopt the technology, followed by a plateau where the majority of enterprises and use cases have integrated AI solutions. It’s essential for SemiVision Research to consider how the industry will evolve, from early adoption to widespread application and potential maturity. This trend will significantly influence demand and technological innovations in the semiconductor industry as AI continues to expand.
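An S-curve is commonly modeled with a logistic function. The sketch below is illustrative only: the midpoint year, steepness, and ceiling are hypothetical parameters chosen to show the shape, not forecasts from SemiVision Research or NVIDIA.

```python
import math


def adoption_share(
    year: float,
    midpoint: float = 2028.0,   # hypothetical year of fastest growth
    steepness: float = 0.8,     # hypothetical growth rate
    ceiling: float = 1.0,       # saturation level (1.0 = full adoption)
) -> float:
    """Logistic S-curve: slow start, rapid middle, plateau near the ceiling."""
    return ceiling / (1.0 + math.exp(-steepness * (year - midpoint)))


for year in range(2022, 2035, 2):
    print(year, round(adoption_share(year), 3))
```

The curve is steepest at the midpoint, where adoption is at half of the ceiling. For semiconductor demand, the practical question is where on this curve the industry currently sits.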

AI Hardware and Datacenter Growth

Data Center Capital Expenditure Growth Trend:

According to a BCG report, hyperscalers are driving growth and major investments. Technology players such as Amazon, Meta, Microsoft, and Google will generate approximately 60% of the industry’s growth from 2023 through 2028, increasing their share of global demand for data center power from 35% to 45%. Within this time frame, the power demand share of enterprise players (companies that maintain on-premises facilities for their own use) will decline from 10% to 5%. In part, this reflects the continued migration of businesses’ data to the cloud and to colocation providers. Colocation providers, which rent sites to tenants or develop specialized cloud offerings, will account for the remaining 50% of data center power demand by 2028, as hyperscalers continue to rely on them to meet growing needs.
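A quick arithmetic check of the shares quoted above, assuming the three segments (hyperscalers, enterprise, colocation) together make up all data center power demand. Under that assumption, the 2023 colocation share is not stated in the text but can be inferred.

```python
# Shares of global data center power demand, in percent, as quoted above.
shares_2028 = {"hyperscalers": 45, "enterprise": 5, "colocation": 50}
shares_2023_stated = {"hyperscalers": 35, "enterprise": 10}

# Assumption: the three segments cover 100% of demand in both years.
implied_colocation_2023 = 100 - sum(shares_2023_stated.values())
total_2028 = sum(shares_2028.values())

print("2028 total:", total_2028)
print("Implied 2023 colocation share:", implied_colocation_2023)
```

On these figures, colocation would slip from an implied 55% to 50% while hyperscalers gain ten points.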

At the GTC conference, it was mentioned that global data centers have entered the era of AI Factories. From 2025 to 2030, the total construction expenditure could reach 1 trillion USD. In the future, enterprises will shift from traditional software to Generative AI, accelerating the procurement of high-performance GPU servers.

It is expected that global data center capital expenditures will continue to grow.

There is an ongoing transition from general-purpose computing (CPU) to accelerated computing (GPU).

The concept of an AI Factory is to generate tokens and then reconfigure them into various forms of information.

NVIDIA is building Digital Twins of AI factories to design and optimize these facilities before physical construction. This helps coordinate the work of suppliers, architects, contractors, and engineers, while optimizing costs and energy efficiency. In simple terms, a Digital Twin is a detailed virtual representation of a physical object or system. A virtual representation here means more than appearance: the twin mirrors its physical counterpart's real-time status and working conditions.

Read the following to understand the significance of Digital Twins.

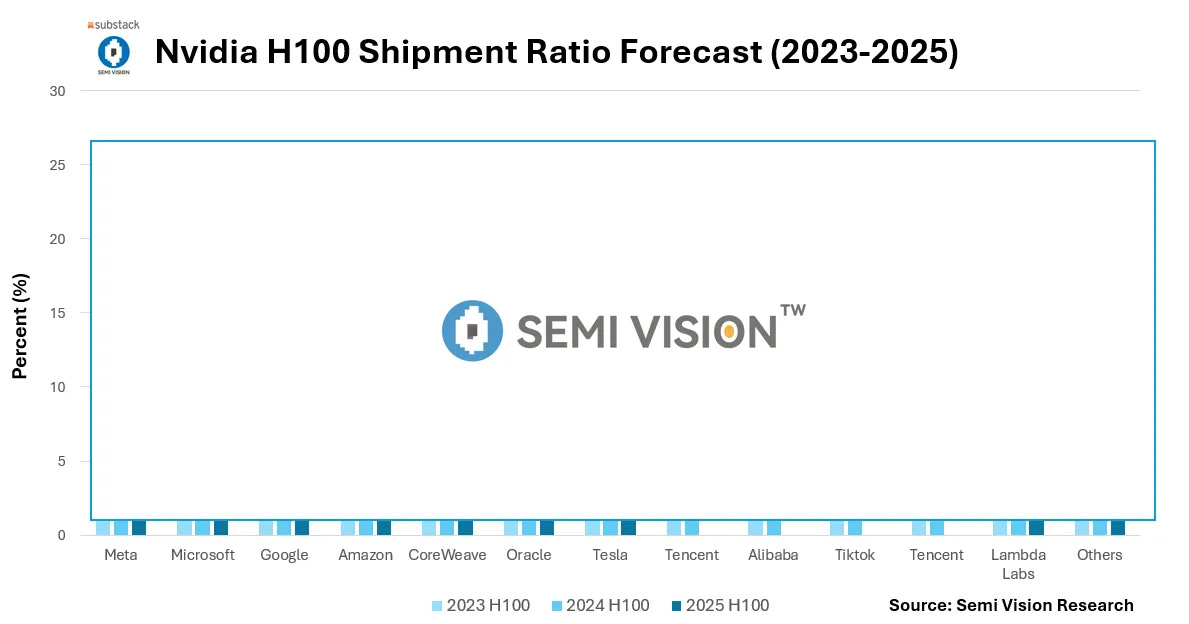
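To make the idea of "mirroring real-time status" concrete, here is a minimal sketch of a twin for a single rack. The class name, fields, and telemetry values are hypothetical and are not taken from Omniverse or any NVIDIA API; a production twin would also carry geometry, physics, and simulation models.

```python
from dataclasses import dataclass, field


@dataclass
class RackTwin:
    """Virtual counterpart of one physical rack (hypothetical fields)."""

    rack_id: str
    power_kw: float = 0.0
    inlet_temp_c: float = 0.0
    history: list = field(default_factory=list)

    def sync(self, telemetry: dict) -> None:
        """Mirror the latest reading reported by the physical rack."""
        self.power_kw = telemetry["power_kw"]
        self.inlet_temp_c = telemetry["inlet_temp_c"]
        self.history.append(dict(telemetry))

    def over_budget(self, limit_kw: float) -> bool:
        """Check the mirrored state against a planned power budget."""
        return self.power_kw > limit_kw


twin = RackTwin("rack-01")
twin.sync({"power_kw": 118.5, "inlet_temp_c": 27.0})  # made-up reading
print(twin.over_budget(limit_kw=120.0))
```

Design questions such as power budgets can then be tested against the virtual copy before, or instead of, changing the physical facility.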

The top four cloud service providers (CSPs) purchased 1.3 million Hopper GPUs in 2024, and this number will rise to 3.6 million Blackwell GPUs in 2025.

Global datacenter capital expenditure, including investments from CSPs and enterprises, is expected to reach $1 trillion by 2028.

CUDA and AI Acceleration

Over 26 million developers in more than 200 countries use CUDA.

NVIDIA Blackwell is 50,000 times faster than the first-generation CUDA GPU, significantly reducing the gap between simulation and real-time digital twins.

AI For Every Industry

AI and Telecommunications

NVIDIA is partnering with Cisco, T-Mobile, and Cerberus ODC to build a complete wireless network technology stack in the U.S. while integrating AI into edge computing.

The global capital expenditure on wireless networks and communication datacenters reaches $100 billion annually, with future investments shifting toward accelerated computing and AI.

Autonomous Vehicles

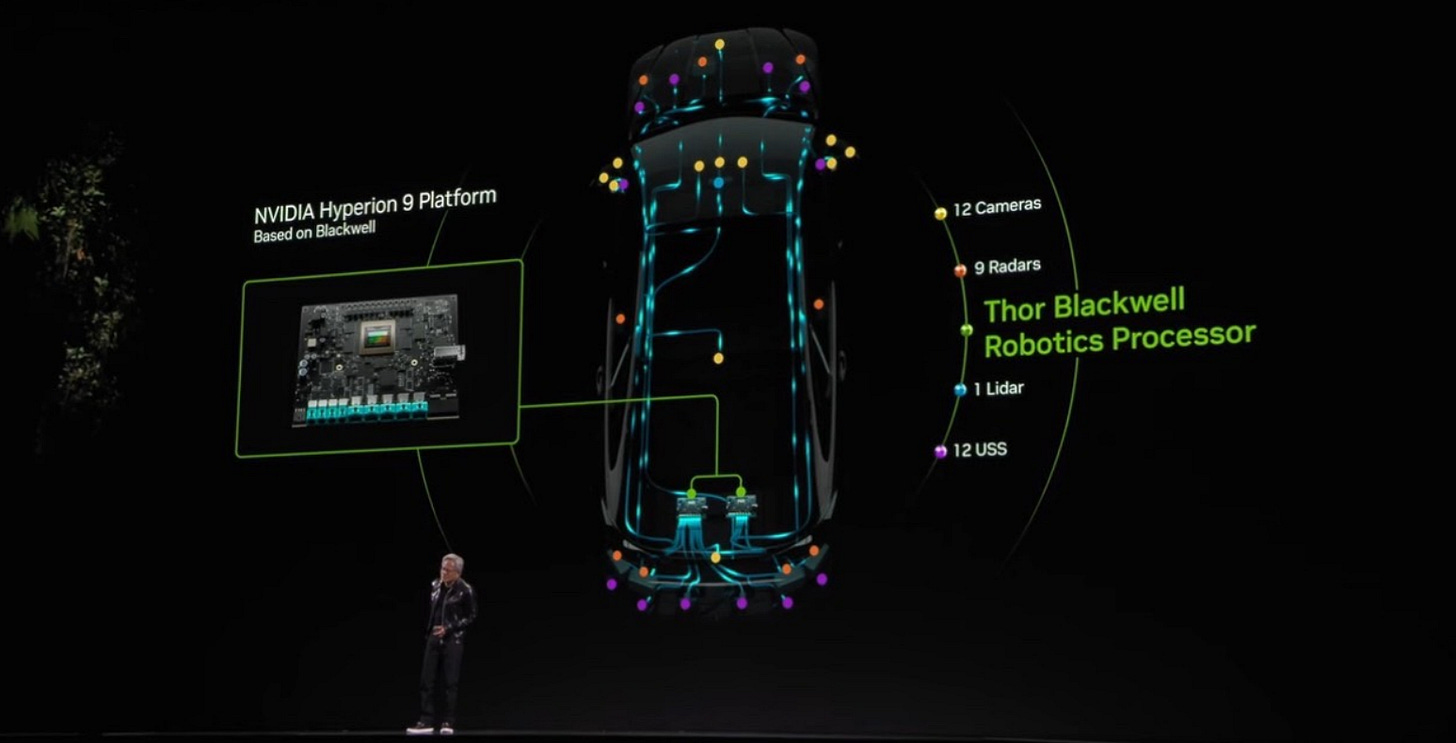

NVIDIA and General Motors (GM) are co-developing the next-generation autonomous vehicle fleet.

NVIDIA utilizes Omniverse and Cosmos to accelerate AI development for autonomous driving.

Cosmos enhances AI predictions and reasoning with end-to-end trainable frameworks using model distillation, closed-loop training, and synthetic data generation.

In 2025, NVIDIA’s “Thor” chip is highly anticipated. As autonomous driving technology progresses to higher levels (Level 3 and above), the Robotaxi market is also expected to experience explosive growth.

Blackwell and Rubin Architectures

Dynamo:

Dynamo is NVIDIA's open-source inference software, designed to ease token-generation bottlenecks. Perplexity is a key partner.

In a 1 MW data center built from 8-GPU Hopper nodes interconnected via InfiniBand, the system generates about 100 tokens/s per user and about 100,000 tokens/s in total.

With super batching, total throughput reaches 2.5 million tokens/s, at the cost of higher latency for each user.

Dynamo + Blackwell achieves 40× the performance of Hopper.
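The Hopper figures above imply a simple trade-off between per-user speed and total throughput. The sketch below works through the arithmetic; the 1,000-token response length is a hypothetical value added for illustration.

```python
# Figures quoted above for a 1 MW Hopper data center.
per_user_tps = 100               # tokens/s delivered to each user
aggregate_tps = 100_000          # tokens/s across the whole data center
batched_aggregate_tps = 2_500_000  # tokens/s with super batching

# Users served simultaneously at 100 tokens/s each.
concurrent_users = aggregate_tps // per_user_tps

# Throughput gained by batching, paid for with higher per-user latency.
batching_gain = batched_aggregate_tps / aggregate_tps

# Hypothetical: time to stream a 1,000-token answer at the per-user rate.
response_tokens = 1_000
seconds_per_response = response_tokens / per_user_tps

print(concurrent_users, batching_gain, seconds_per_response)
```

An AI factory operator has to pick a point between these two extremes: fast responses for fewer users, or maximum tokens per megawatt with slower responses.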

NVIDIA Omniverse AI Factory

Enables digital twins for AI factories before physical construction.

NVIDIA engineers designed a 1GW AI factory integrating DGX supercomputing, power/cooling solutions from Vertiv and Schneider Electric, and NVIDIA Air for network simulation, optimizing total cost of ownership (TCO) and power usage effectiveness (PUE).

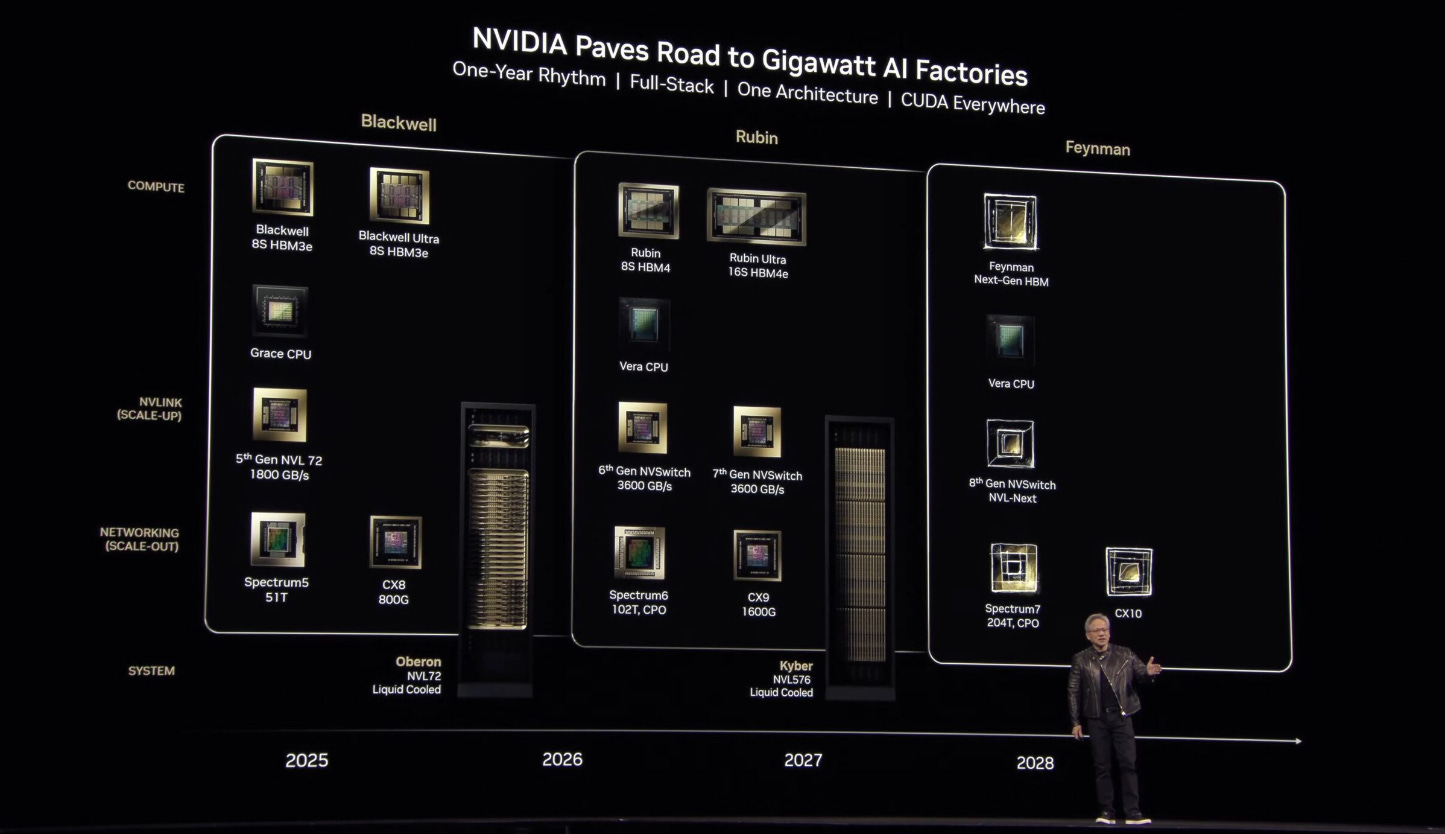

1. Blackwell:

• Offers up to 40 times the inference performance of Hopper.

• Blackwell Ultra NVL72 is expected to launch in 2H25, providing 1.5x the performance (1.1 Exaflops), 1.5x the memory, and 2x the network bandwidth (CX8).

2. Vera Rubin:

• Expected to launch in 2H26 in the NVL144 configuration, which links 144 GPU dies over NVLink.

• Provides 3.3x performance (3.6 Exaflops), 1.6x memory (HBM4), 2x NVLink bandwidth (NVLink 6), and 2x network bandwidth (CX9).

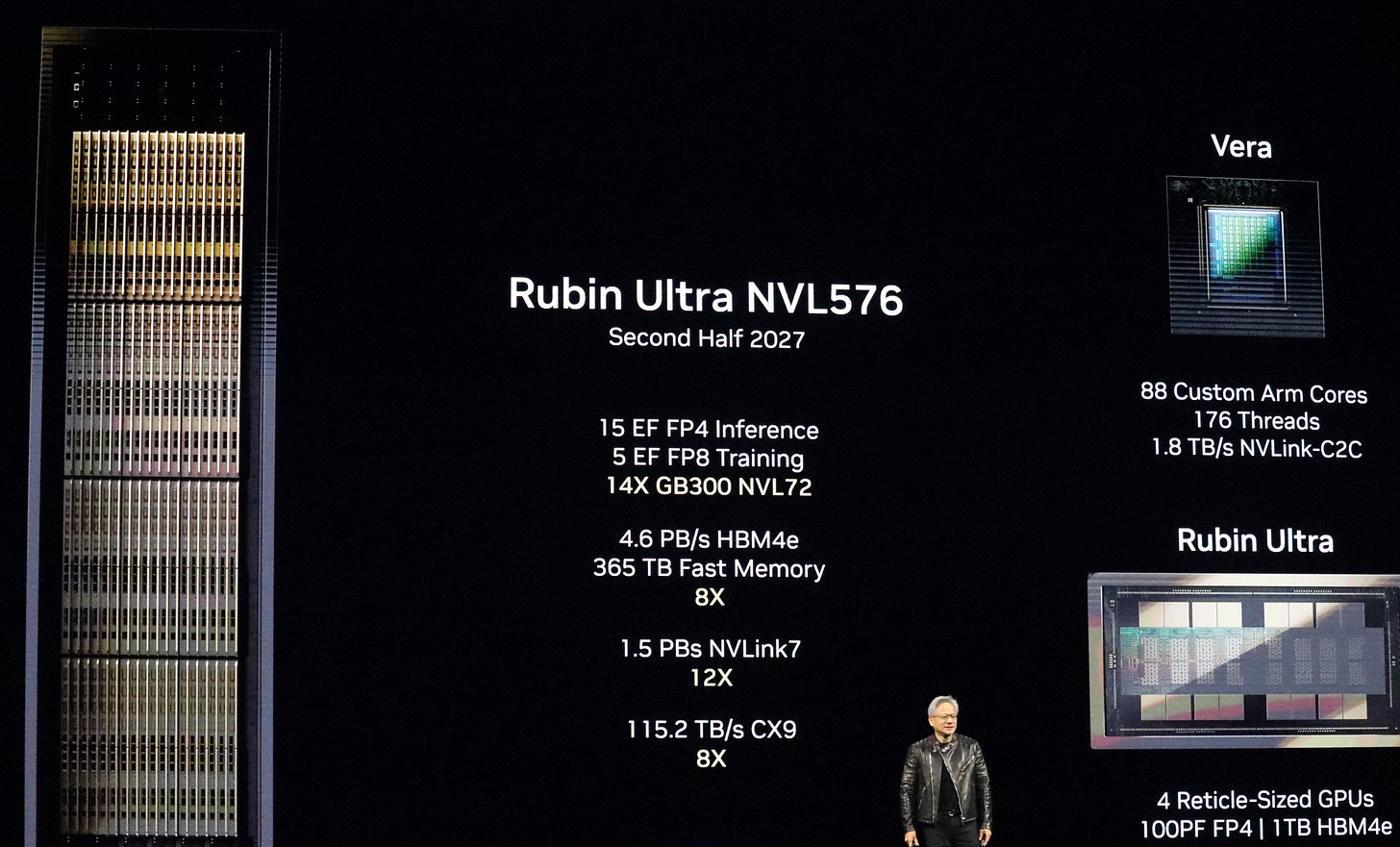

3. Rubin Ultra:

• Expected to launch in 2H27 in the NVL576 configuration, which links 576 GPU dies over NVLink.

• Provides 14x performance (15 Exaflops), 8x memory (HBM4e), 12x NVLink bandwidth (NVLink 7), and 8x network bandwidth (CX9).

• Power consumption is 600 kW per rack, and each rack contains 2.5 million components.

4. Performance Improvements:

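• Blackwell provides a 68x performance increase compared to Hopper, measured in scale-up compute (FLOPS) rather than the inference throughput cited in item 1.

• Rubin offers a 900x performance improvement over Hopper on the same measure.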

• In terms of TCO (Total Cost of Ownership), Hopper has a baseline of 1x:

• Blackwell reduces TCO to 0.13x.

• Rubin reduces TCO to 0.03x.

5. Successor to Rubin:

• The generation that follows Rubin is named Feynman.

• NVIDIA also presented what it described as the world’s first 1.6T co-packaged optics (CPO) system, built on micro-ring resonator modulation technology. Compared with traditional pluggable optical modules in large-scale data centers, it significantly reduces power consumption.

Computing Performance Scaling
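The multipliers quoted in the roadmap above can be cross-checked with simple arithmetic. This sketch assumes the Vera Rubin and Rubin Ultra performance multipliers are relative to Blackwell Ultra NVL72 at 1.1 Exaflops; that baseline is an assumption about how the figures were presented, and all variable names are illustrative.

```python
# Baseline from the list above: Blackwell Ultra NVL72.
baseline_exaflops = 1.1

# Assumption: these multipliers are relative to Blackwell Ultra NVL72.
multipliers = {"Vera Rubin": 3.3, "Rubin Ultra": 14.0}
implied_exaflops = {
    name: round(baseline_exaflops * factor, 1) for name, factor in multipliers.items()
}

# Relative performance and TCO versus Hopper, as quoted above.
relative_performance = {"Hopper": 1, "Blackwell": 68, "Rubin": 900}
relative_tco = {"Hopper": 1.0, "Blackwell": 0.13, "Rubin": 0.03}

# A TCO of 0.13x means the same work costs about 1 / 0.13 times less.
cost_reduction = {name: round(1 / tco, 1) for name, tco in relative_tco.items()}
rubin_over_blackwell = round(
    relative_performance["Rubin"] / relative_performance["Blackwell"], 1
)

print(implied_exaflops)
print(cost_reduction)
print(rubin_over_blackwell)
```

On these numbers, Rubin Ultra's implied figure lands close to the quoted 15 Exaflops, and the step from Blackwell to Rubin is roughly 13x in performance and about 4x in TCO.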

Future Roadmap

The next-generation product after Rubin is named Feynman. On the networking side, NVIDIA presented what it described as the world’s first 1.6T CPO system, using micro-ring resonator modulation technology. Traditional pluggable optical modules in large-scale data centers together consume megawatts of power, so replacing them with CPO is expected to save a significant amount of energy.

For paid members, SemiVision Research will discuss the following topics:

Optical Interconnects & Co-Packaged Optics

Robotics and AI Development

Feynman (2028) and Future Technologies

Co-Packaged Optics (CPO) integration

NVIDIA GTC 2025 Conference: Spectrum-X and Quantum-X

Teardown of the Quantum-X specification

Traditional Optical Modules -> CPO Optical Modules