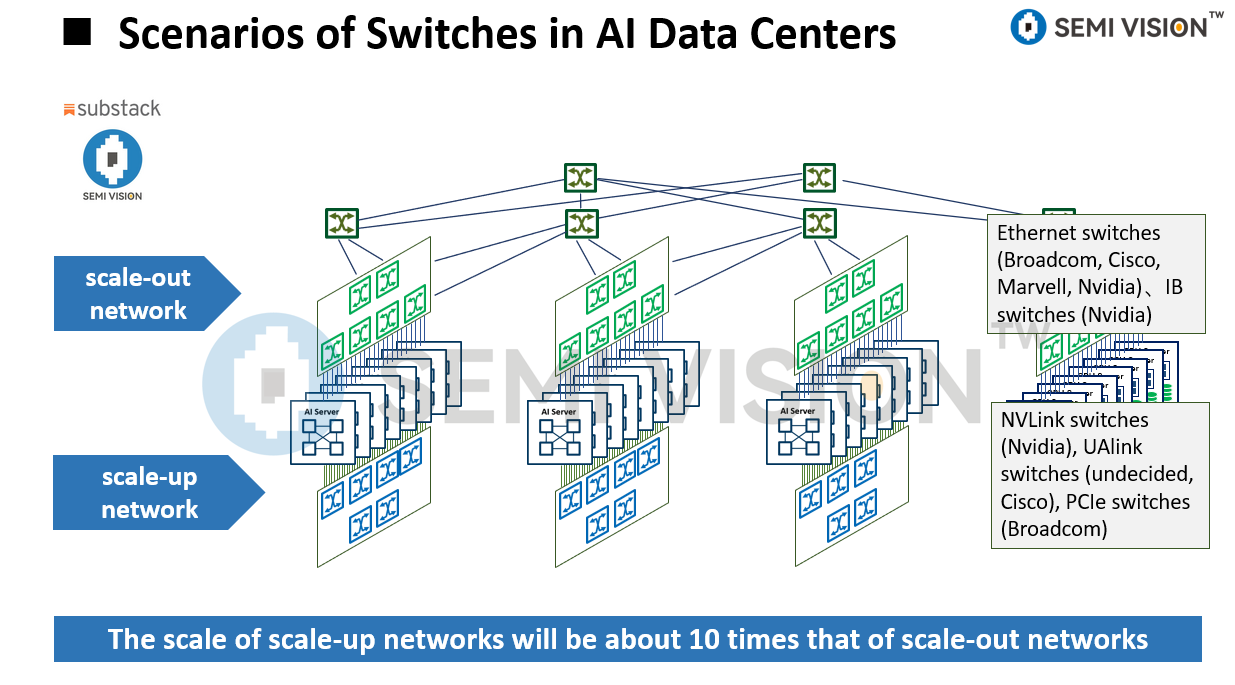

Scale Out vs. Scale Up: Understanding the Differences

Scale Out, also called horizontal scaling, means adding more machines to a system, while Scale Up, also called vertical scaling, means making a single machine more powerful.

Scale Up enhances computing power by adding more processors to an existing system.

Scale Out increases computing capacity by adding independent servers to distribute workloads.

Scalability in Server Architectures

One crucial factor to consider in server architectures is scalability.

As a business grows, its expanding user base will eventually push server performance and concurrency to their limits.

To address this challenge, there are generally two approaches:

Scale Out – distributing workloads across multiple servers (distributed computing).

Scale Up – upgrading individual servers or chassis-based systems.

Scale Out (Horizontal Scaling)

Scale Out refers to adding multiple servers to meet increasing demand.

It relies on distributed computing, load balancing, and fault tolerance to improve performance and reliability.

This approach enhances scalability by allowing companies to expand server capacity as needed.
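As a minimal sketch of how load balancing and fault tolerance work together in a Scale Out pool, the Python below hands requests to servers in round-robin order and skips any node marked unhealthy. The `Server` and `RoundRobinBalancer` names are hypothetical, and round-robin is only one simple policy; production load balancers also use least-connections, weighted, or hash-based schemes and detect failures through health checks rather than a manual flag.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Server:
    """Hypothetical backend node in a scale-out pool."""
    name: str
    healthy: bool = True  # stands in for the result of a real health check


class RoundRobinBalancer:
    """Hands each request to the next healthy server in turn (round-robin)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._ring = cycle(self.servers)

    def add(self, server):
        # Scaling out: register another independent server with the pool.
        # Rebuilding the cycle restarts the rotation from the first server.
        self.servers.append(server)
        self._ring = cycle(self.servers)

    def pick(self):
        # Visit each server at most once per call and skip unhealthy nodes,
        # so one failed server does not take the whole service down.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server.healthy:
                return server
        raise RuntimeError("no healthy servers available")


pool = RoundRobinBalancer([Server("a"), Server("b"), Server("c")])
pool.servers[1].healthy = False  # simulate a failure of server "b"
print([pool.pick().name for _ in range(4)])  # ['a', 'c', 'a', 'c']
```

Growing capacity here is just a call to `add`, which mirrors the idea that Scale Out expands the pool rather than changing any single machine.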

Scale Up (Vertical Scaling)

Scale Up involves upgrading a single large-scale server by adding more processing resources (e.g., CPUs, RAM).

This method improves application performance by enhancing the capabilities of existing infrastructure.

Both approaches have their advantages, and the choice between Scale Out and Scale Up depends on business requirements, performance needs, and cost. Typically, we first Scale Up to raise the performance of a single server until it meets business demand. Once that server reaches its performance limit, we Scale Out to keep up with further growth.
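The "Scale Up first, then Scale Out" rule can be sketched as a small capacity planner. This is a minimal illustration under stated assumptions: capacity is measured in requests per second, the upgrade tiers are made-up numbers rather than benchmarks, and adding servers is assumed to scale throughput linearly (real Scale Out deployments lose some capacity to coordination and load-balancing overhead). `plan_capacity` is a hypothetical helper, not a standard tool.

```python
import math


def plan_capacity(demand_rps, tier_capacities_rps):
    """Scale Up first, then Scale Out.

    tier_capacities_rps: hypothetical throughput (requests/second) one server
    reaches at each upgrade step, sorted in ascending order.
    Returns (per_server_capacity, server_count).
    """
    # Scale Up: pick the smallest single-server upgrade that covers demand.
    for capacity in tier_capacities_rps:
        if capacity >= demand_rps:
            return capacity, 1
    # Past the single-server ceiling: Scale Out with identical top-tier servers.
    # Assumes perfectly linear scaling, which real systems rarely achieve.
    ceiling = tier_capacities_rps[-1]
    return ceiling, math.ceil(demand_rps / ceiling)


tiers = [1_000, 2_000, 4_000]  # illustrative numbers, not benchmarks
print(plan_capacity(1_500, tiers))   # (2000, 1): a Scale Up step is enough
print(plan_capacity(10_000, tiers))  # (4000, 3): Scale Out beyond the ceiling
```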

With Broadcom’s 7th-generation switch chip, bandwidth (BW) has increased 80x, while total system power consumption has increased 22x:

Power consumption of the SerDes and optical modules (SerDes + DSP) has grown three times as much as that of the switch logic chip.

As the SerDes speed in switches increases, the total link power consumption increases rapidly.

Higher link speeds not only raise power consumption but also make signal integrity (SI) harder to maintain, so each further speed step is substantially more difficult to achieve.
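The two Broadcom multipliers also say something about efficiency. Treating energy per bit as total system power divided by bandwidth, $E_{\text{bit}} = P / BW$, and assuming both multipliers are measured against the same baseline generation, the ratio between the newer and older generations works out as:

$$
\frac{E_{\text{bit,new}}}{E_{\text{bit,old}}}
= \frac{P_{\text{new}} / BW_{\text{new}}}{P_{\text{old}} / BW_{\text{old}}}
= \frac{P_{\text{new}} / P_{\text{old}}}{BW_{\text{new}} / BW_{\text{old}}}
= \frac{22}{80} = 0.275
$$

So although absolute system power rose 22x, each transmitted bit costs roughly 27.5% of its earlier energy, about a 3.6x efficiency gain. The absolute power growth is what drives the SerDes and optics concerns listed above.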

CPO’s Advantage Over DSP-Based Optical Modules

CPO (co-packaged optics) offers a significant advantage over traditional DSP-based pluggable optical modules: it eliminates the separate DSP inside the module. CPO places the optical components in the same package as the switch ASIC and shifts the DSP functionality to the ASIC side. By removing the DSP from the optical module, CPO reduces power consumption and latency and cuts the losses associated with signal conversion, making it a more scalable and energy-efficient option for high-speed data transmission.
