AI Is Not a Cycle—It Is a Structural Shift in Memory Economics

Original Article By SemiVision Research [Reading time: 10 mins]

The Memory Industry Has Shifted from Cyclical to Structurally Constrained — AI Is the Core Driver

Recently, there has been a surge of discussion and analysis around the memory industry, particularly as AI reshapes the semiconductor landscape. From HBM supply constraints to structural shifts in DRAM and NAND, memory has moved to the center of strategic conversations across the entire AI value chain.

At SemiVision, we have also published our own perspective on these developments. In this article, we outline how the memory market is transitioning from a cyclical model to a structurally constrained environment, why AI-driven bandwidth demand is redefining capacity allocation, and where durable value is likely to concentrate across the supply chain. We hope our analysis provides useful context as industry participants navigate this new phase of the memory market.

The global memory industry is undergoing a fundamental transition. What was once a highly cyclical business—defined by alternating periods of oversupply and shortage—is increasingly becoming a structurally supply-constrained market, with AI as the primary driving force.

At the heart of AI’s real-world scaling limits lie two hard constraints: compute capacity and memory bandwidth. While advances in GPUs and accelerators continue at a rapid pace, memory bandwidth—rather than raw compute—has emerged as the dominant bottleneck. High Bandwidth Memory (HBM) has therefore become a critical component in breaking AI performance ceilings.

However, HBM is not a memory product that can be scaled flexibly. Its production is constrained by cleanroom capacity, advanced packaging requirements, and highly complex manufacturing processes, resulting in extremely low supply elasticity. These constraints have effectively imposed a structural ceiling on the entire DRAM industry, fundamentally altering supply dynamics.

HBM Crowding-Out Effects Are Reshaping the DRAM Market

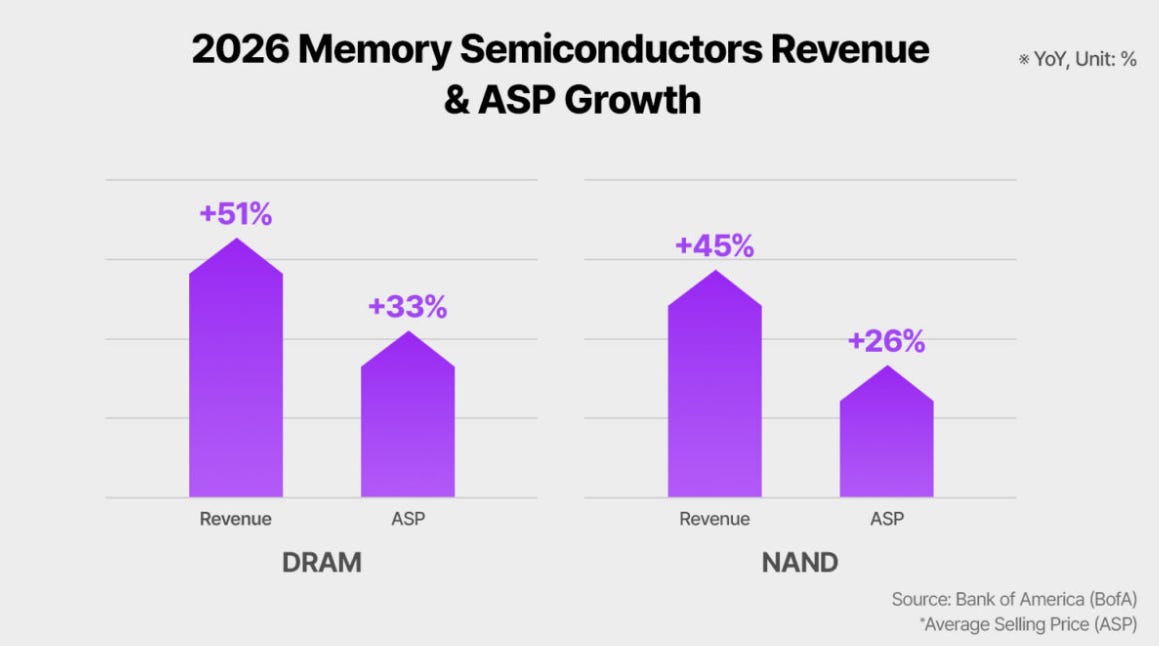

The rapid expansion of HBM capacity is creating a powerful crowding-out effect across the broader DRAM landscape. As memory manufacturers prioritize HBM and high-end products such as DDR5, capacity is being structurally reallocated away from legacy, commodity, and niche DRAM segments.

As a result, even non-HBM DRAM products are now facing tightening supply conditions. Mature-node memory, once governed by classic price cycles, is increasingly transitioning from a demand-driven pricing model to a supply-limited regime. The industry is no longer experiencing a temporary upswing; instead, it is adjusting to a new equilibrium where capacity expansion cannot keep pace with structurally higher demand.

This marks a decisive break from historical patterns, where memory oversupply would inevitably reemerge following price rallies.

NAND Flash Enters a Structural Tightening Phase

A similar transformation is underway in the NAND Flash market. Following aggressive production cuts by major suppliers, global NAND supply has tightened significantly. At the same time, AI-driven demand for enterprise SSDs—particularly in data centers and AI training infrastructure—has surged.

The combination of deliberate supply discipline and structural demand growth has pushed the NAND market into a sustained undersupply environment, driving continued price recovery. Current trends suggest that above-trend growth in the memory market could persist through 2026, supported by AI infrastructure build-outs rather than short-term inventory cycles.

Investment Focus Has Shifted from “Recovery” to “Durability”

After a sharp rebound in both memory pricing and equity valuations, the core investment question has evolved. The market is no longer debating whether the memory industry has recovered; instead, attention has shifted to profit visibility, sustainability, and valuation differentiation.

In this environment, broad-based exposure to the memory sector is no longer optimal. The investment focus is increasingly selective, favoring companies with:

Specialized or niche DRAM portfolios

Strength in mature-node memory with structurally constrained supply

Stable, long-term customer relationships, particularly in enterprise and AI infrastructure

At the supply-chain level, value accrues disproportionately to segments closest to the compute bottleneck. High-end advanced packaging, memory testing, and enterprise-grade memory modules—where qualification barriers are high and demand is tightly linked to AI system deployment—are emerging as structurally advantaged positions.

The memory industry is no longer merely rebounding from a downturn; it is being redefined by AI-driven structural constraints. HBM has become the keystone that links compute scaling to memory supply limits, reshaping DRAM and NAND markets alike. In this new paradigm, capacity scarcity—not cyclicality—sets the rules, and value increasingly concentrates where memory interfaces directly with AI compute bottlenecks.

Understanding this shift is essential—not only for assessing near-term earnings, but for identifying where durable value will be created in the AI era.

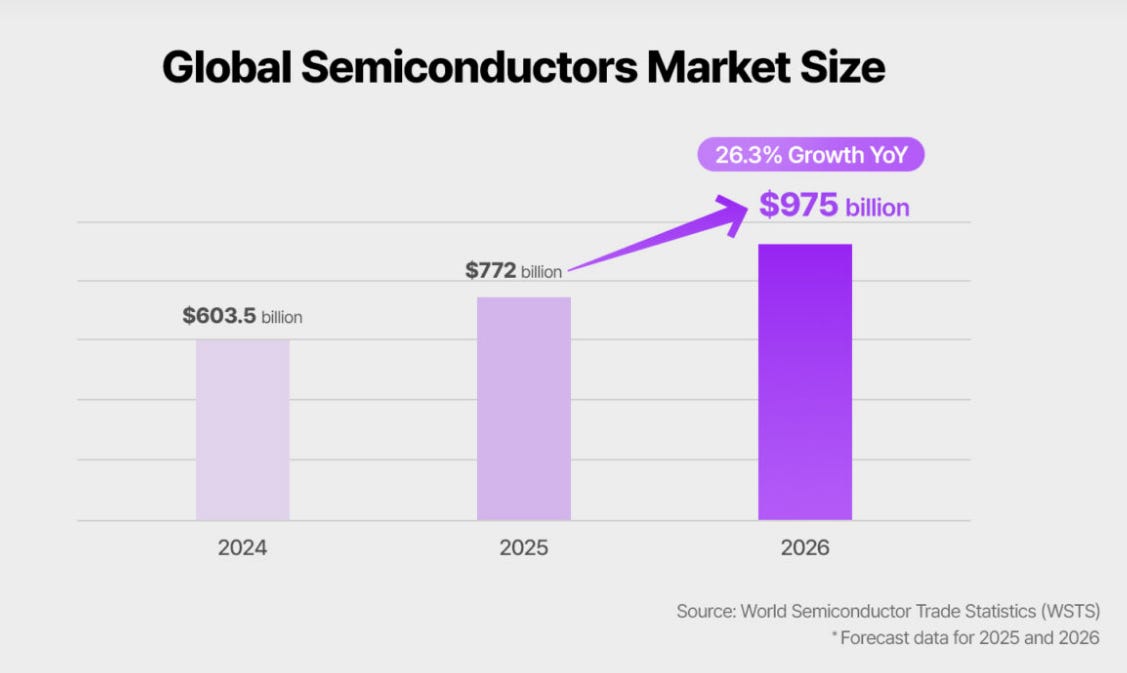

AI Remains the Core Growth Engine in 2026, but Overall Semiconductor Growth Moderates

Looking ahead to 2026, the global semiconductor industry is expected to enter a phase of AI-led but decelerating growth, shaped by both macroeconomic normalization and structural shifts in end-market demand. According to market report forecasts, global GDP growth is projected to slow from 3.4% in 2024 to approximately 2.9% in both 2025 and 2026, signaling a less supportive macro backdrop for cyclical industries.

Semiconductors, as foundational components of the electronics supply chain, sit upstream of consumer and industrial demand. Historically, when economic conditions are strong, downstream sectors—such as smartphones, PCs, automotive electronics, and industrial equipment—drive higher semiconductor shipments. Conversely, macro softening typically translates into slower volume growth. This dynamic is increasingly visible as most consumer electronics categories remain either flat or grow only modestly.

Against this backdrop, AI infrastructure stands out as the primary growth pillar. Global semiconductor revenue is forecast to grow by approximately 11.9% year-on-year in 2026, a solid expansion but notably slower than the explosive growth seen over the past two years. AI servers—after experiencing an unprecedented surge driven by large-scale data center buildouts—are expected to continue growing, but at a more measured pace as capacity digestion begins.

The key structural change lies in growth concentration. While AI-related demand remains strong, it is no longer sufficient to fully offset softness across broader electronics markets. As a result, the industry is transitioning from a broad-based recovery to a narrower, AI-dominated growth profile. This shift increases earnings dispersion across the semiconductor value chain, favoring companies with deep exposure to AI compute, advanced packaging, high-bandwidth memory, and high-performance networking.

In summary, 2026 will not mark the end of AI-driven growth, but rather a transition into a more mature phase. The semiconductor industry remains structurally supported by AI, yet overall growth rates are normalizing as macro conditions cool and expansion becomes increasingly selective rather than universal.

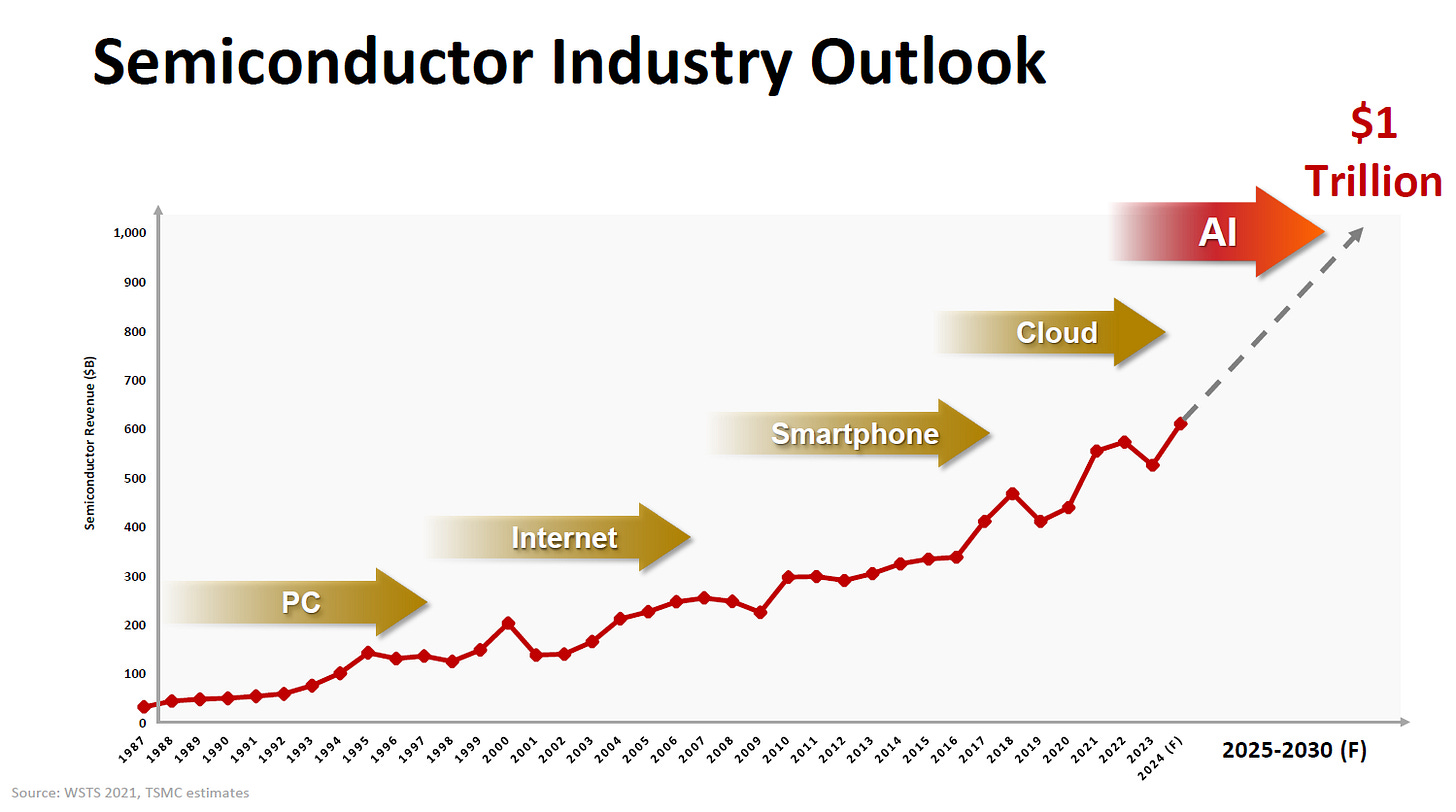

AI Semiconductors Emerge as the Critical Growth Engine

AI-related semiconductors have become the most important structural driver of growth in the global semiconductor industry. Demand for AI chips is expanding at a pace far exceeding that of the broader market, positioning AI as the core engine of semiconductor expansion over the next five years.

This growth is driven not only by large-scale AI server deployments, but also by the rapid commercialization and widespread deployment of AI models across multiple end markets. As AI workloads move from training to inference, demand is increasingly extending beyond centralized data centers into edge devices, smartphones, PCs, and embedded systems. Inference-related demand—characterized by high volume and broad deployment—is expected to become a major and more durable growth contributor.

While year-on-year growth rates are projected to gradually moderate as the market base expands, absolute market size continues to rise steadily. This reflects a transition from an early explosive phase to a more sustainable, structurally supported growth trajectory. AI is no longer a single product cycle but a platform-level transformation affecting compute architectures, memory, networking, and system design.

Importantly, the depth of AI adoption continues to increase. Use cases are becoming more complex, models larger and more integrated into real-world workflows, and performance requirements more demanding. As a result, AI semiconductor demand is expected to remain resilient even as broader electronics markets face cyclical softness.

AI semiconductors have shifted from a high-growth niche to a foundational pillar of the semiconductor industry, reshaping long-term growth dynamics and value distribution across the supply chain.

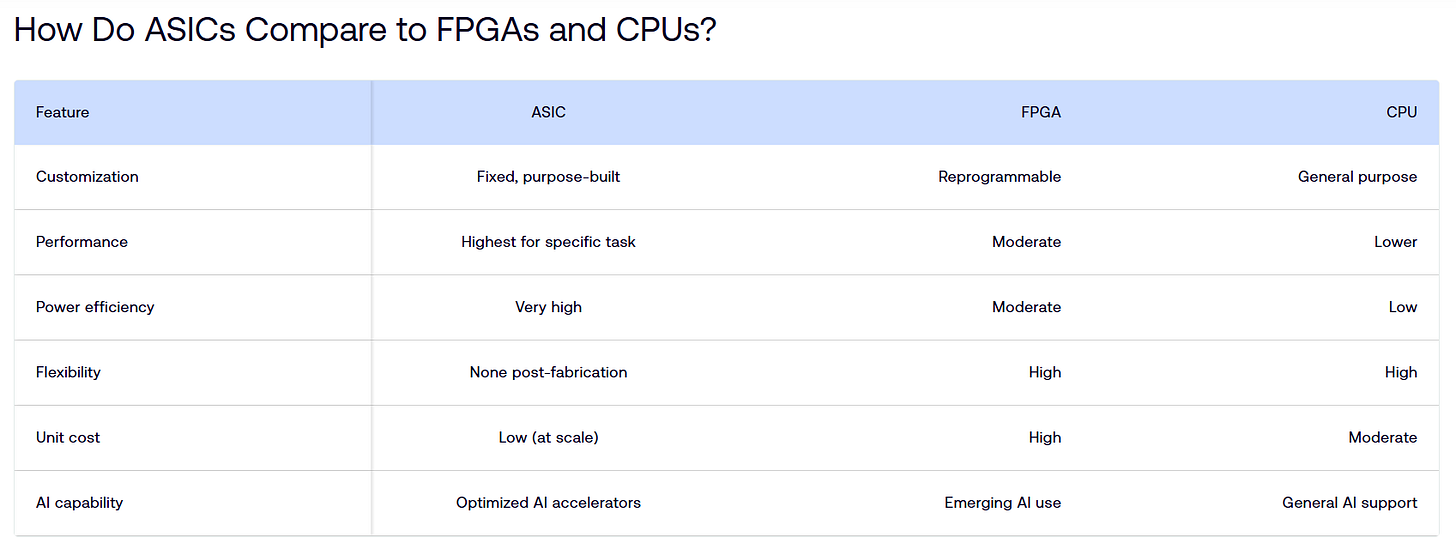

AI ASICs Are Set to Deliver the Fastest Growth in the AI Semiconductor Market

Among all AI semiconductor categories, AI ASICs and non-GPU accelerators are projected to deliver the highest growth rates over the 2024–2029 period, reshaping the competitive landscape of AI hardware. While GPUs remain the largest and most established segment, with a projected CAGR of approximately 22%, growth momentum is increasingly shifting toward custom and application-specific silicon.

Non-GPU AI accelerators are forecast to grow at a 55.5% CAGR, reflecting rising demand from hyperscalers and cloud service providers seeking optimized performance, lower power consumption, and tighter system integration. Microprocessors used in AI workloads are also expected to grow rapidly, with a projected 44.1% CAGR, driven by the expansion of inference at the edge and within enterprise systems. In contrast, integrated baseband and application processors show more moderate growth of around 10.3%, reflecting their exposure to mature consumer markets.
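To make the gap between these rates concrete, the sketch below compounds each quoted CAGR over the five-year 2024–2029 window. The function name `growth_multiple` and the segment labels are illustrative assumptions; only the percentages come from the text above.

```python
# Compound each CAGR quoted above over the 2024-2029 window (5 years).
# Names are illustrative; only the growth rates come from the article.

YEARS = 5

CAGR_BY_SEGMENT = {
    "GPUs": 0.22,
    "Non-GPU AI accelerators": 0.555,
    "Microprocessors (AI workloads)": 0.441,
    "Integrated baseband/application processors": 0.103,
}


def growth_multiple(cagr: float, years: int = YEARS) -> float:
    """Return end value / start value after compounding `cagr` for `years`."""
    return (1.0 + cagr) ** years


if __name__ == "__main__":
    for segment, cagr in CAGR_BY_SEGMENT.items():
        print(f"{segment}: {growth_multiple(cagr):.1f}x over {YEARS} years")
```

Under these rates, a segment compounding at 55.5% grows roughly ninefold in five years, while one compounding at 22% grows a little under threefold. That difference is why share can shift quickly even when the largest segment is still expanding.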

This divergence in growth rates highlights a structural shift: AI workloads are becoming more diverse, and no single architecture can efficiently serve all use cases. As a result, custom ASICs tailored to specific models, workloads, and deployment environments are gaining strategic importance.

The global AI semiconductor market is expected to expand from approximately USD 138.8 billion in 2024 to USD 438.5 billion by 2029, with GPUs still dominant but accounting for a smaller share of total growth. The fastest value creation will increasingly occur in specialized accelerators, advanced packaging, and system-level optimization, where differentiation and customer lock-in are strongest.
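The two market-size endpoints imply an overall growth rate that the article does not state directly. A minimal sketch of that arithmetic follows; the helper name `implied_cagr` is an assumption introduced here, and the inputs are the USD figures quoted above.

```python
# Back out the compound annual growth rate implied by two endpoint values.
# The helper name is illustrative; the USD figures are those quoted above.

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    rate = implied_cagr(start_value=138.8, end_value=438.5, years=5)
    print(f"Implied 2024-2029 CAGR: {rate:.1%}")
```

The result is roughly 26% per year. That sits above the approximately 22% CAGR quoted for GPUs, which is consistent with GPUs accounting for a shrinking share of the total.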

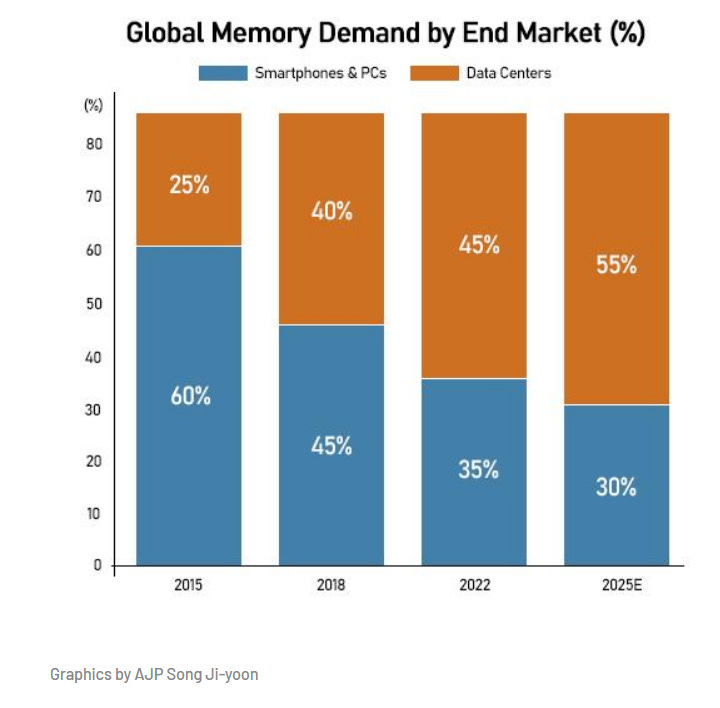

Servers Are Becoming the Primary Engine of DRAM Demand

Servers and data centers have emerged as the dominant growth driver for global DRAM demand, driven by the rapid expansion of AI workloads. In 2023, servers and data centers accounted for nearly 40% of total DRAM bit shipments, already approaching the share historically held by mobile devices. This marked a structural shift in the DRAM consumption mix.

The momentum is accelerating. As AI adoption scales, server-related applications are projected to account for approximately 66% of global DRAM output by 2026, growing at a pace significantly faster than traditional end markets. Importantly, this surge is not limited to High Bandwidth Memory (HBM) alone. While HBM remains the most visible beneficiary, AI servers are driving broader spillover demand across the DRAM ecosystem.

Beyond HBM and DDR5 used in mainstream servers, demand for LPDDR is expanding in edge AI systems and specialized architectures that prioritize power efficiency. At the same time, graphics DRAM consumption is increasing as AI workloads require higher memory capacity and bandwidth for visualization, model development, and inference acceleration.

In contrast, DRAM demand from PCs and mobile devices remains comparatively stable, reflecting slower replacement cycles and more mature markets. As a result, the DRAM industry is transitioning from a consumer-driven model to a server-centric demand structure, where capacity allocation, pricing power, and long-term profitability are increasingly shaped by AI infrastructure investment.

This structural rebalancing is reshaping DRAM supply strategies and reinforcing AI servers as the central pillar of memory growth over the next several years.

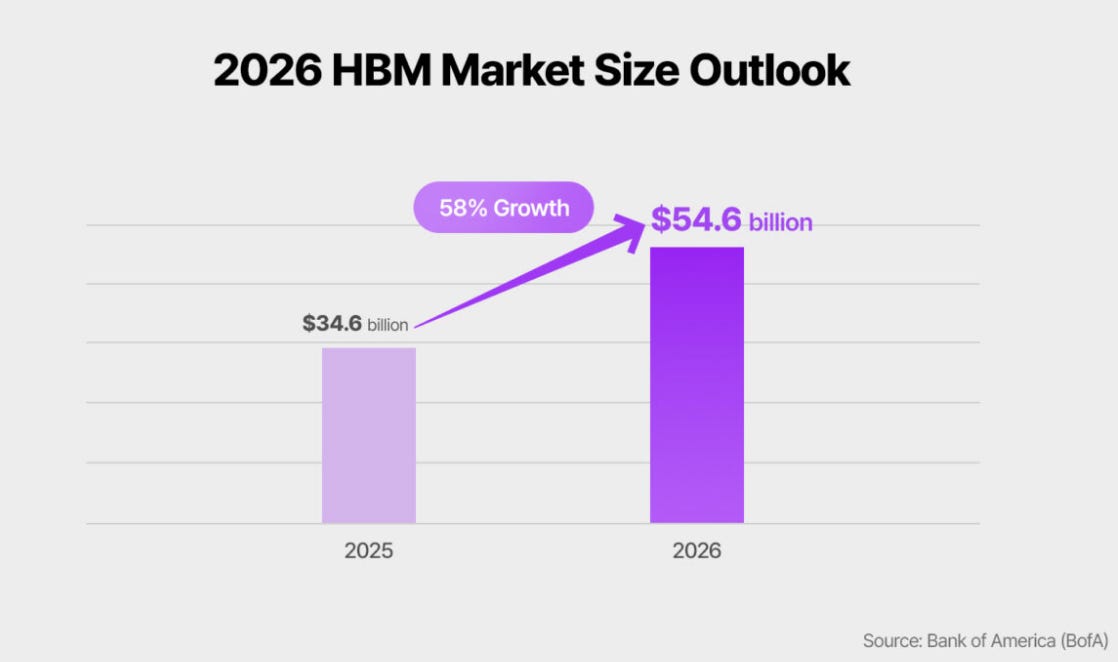

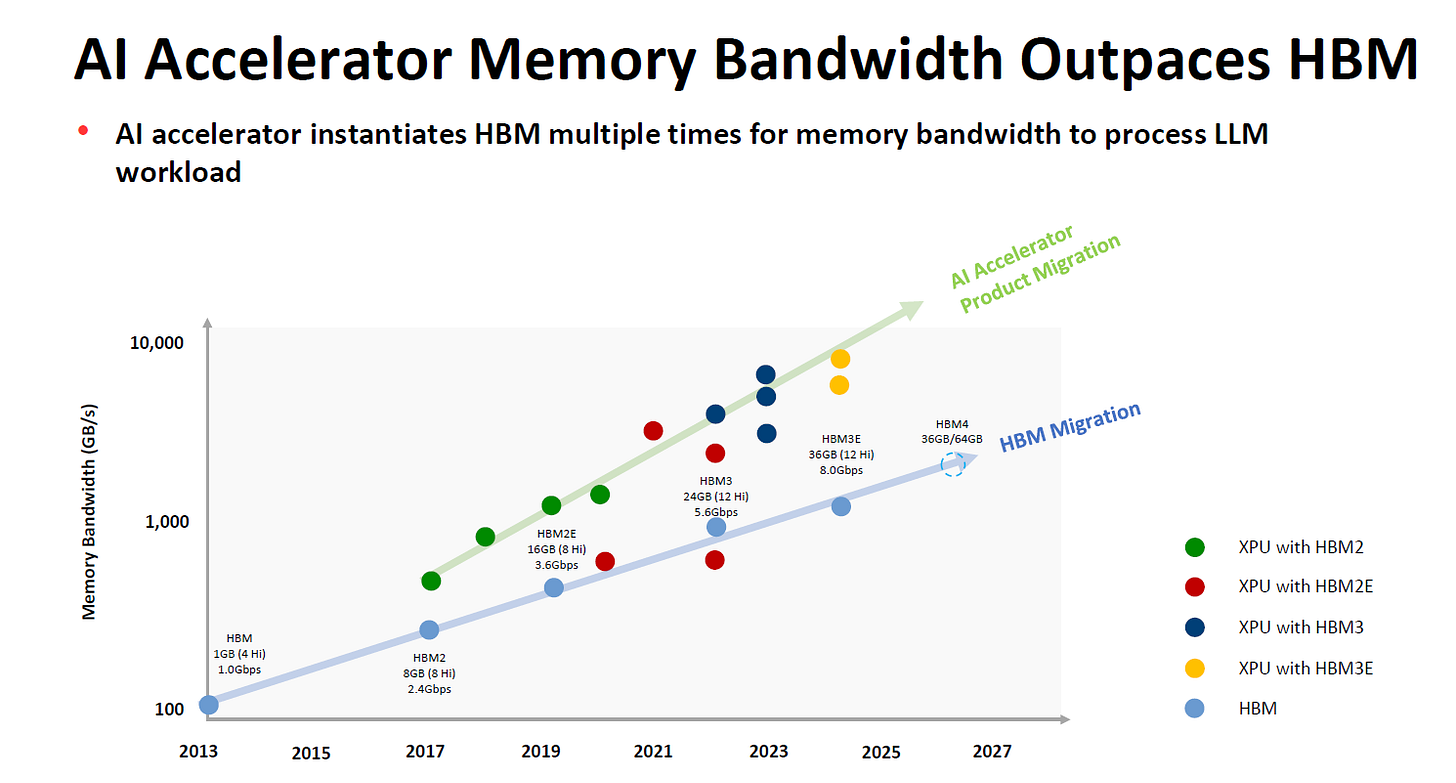

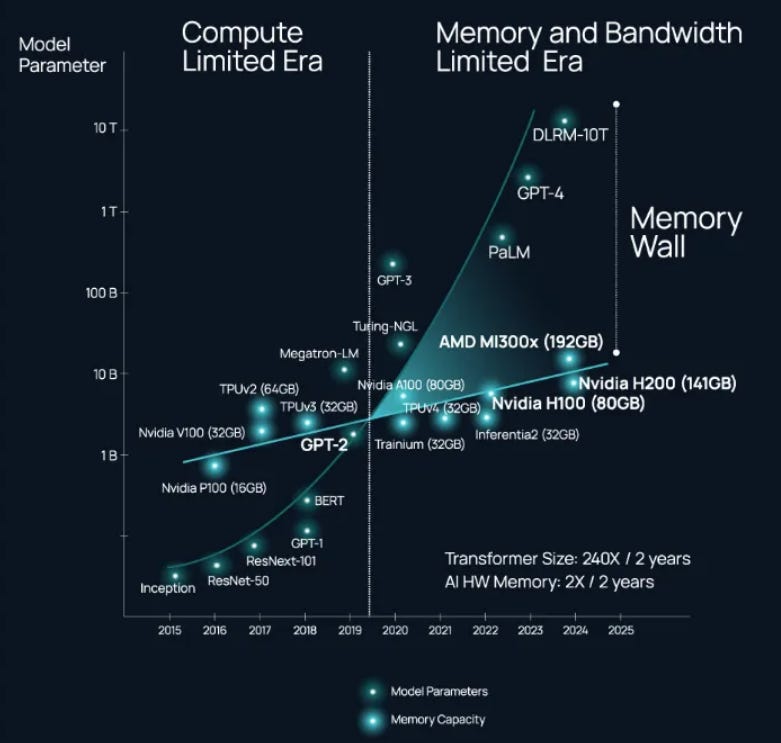

AI Is Driving an Explosion in HBM Demand

As AI models—particularly large language models—continue to scale rapidly, traditional memory architectures are struggling to keep pace with the growth in compute performance. Conventional memory such as DDR DRAM can no longer deliver sufficient bandwidth or access speed to fully utilize modern AI processors, including GPUs and other accelerators. This widening mismatch has created a fundamental bottleneck known as the “Memory Wall.”

While compute capability has advanced at an exponential rate, memory bandwidth and latency improvements have progressed far more slowly. As a result, AI processors increasingly spend idle cycles waiting for data rather than performing computation. This shift marks a transition from a compute-limited era to a memory- and bandwidth-limited era, where system performance is constrained by data movement rather than raw processing power.
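One common way to reason about this shift is the roofline model. The article does not name it, but it captures the same idea: attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The sketch below uses hypothetical hardware figures chosen only for illustration, not measurements of any real processor.

```python
# Roofline-style estimate: throughput is capped by compute or by data movement.
# All hardware figures below are hypothetical, chosen only for illustration.

def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Return min(peak compute, memory bandwidth * arithmetic intensity)."""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


def is_memory_bound(peak_tflops: float, bandwidth_tb_s: float,
                    flops_per_byte: float) -> bool:
    """True when data movement, not compute, sets the ceiling."""
    return bandwidth_tb_s * flops_per_byte < peak_tflops


if __name__ == "__main__":
    PEAK_TFLOPS = 1000.0    # hypothetical peak compute, TFLOP/s
    BANDWIDTH_TB_S = 3.0    # hypothetical memory bandwidth, TB/s
    for intensity in (2.0, 100.0, 500.0):
        perf = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, intensity)
        bound = "memory" if is_memory_bound(PEAK_TFLOPS, BANDWIDTH_TB_S, intensity) else "compute"
        print(f"{intensity:6.1f} FLOP/byte -> {perf:7.1f} TFLOP/s ({bound}-bound)")
```

With these assumed figures, a workload performing 2 operations per byte reaches only 6 of the 1,000 available TFLOP/s. For such a workload, adding bandwidth raises the ceiling and adding compute does not.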

The Memory Wall has forced the industry to pursue more efficient memory solutions, and HBM has emerged as the most effective response. By stacking DRAM dies vertically and integrating them closely with processors via advanced packaging, HBM delivers dramatically higher bandwidth and lower energy per bit compared with conventional DDR memory. These characteristics make HBM uniquely suited to feeding data-hungry AI workloads at scale.
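Much of the bandwidth advantage follows from interface width. As a rough sketch, peak bandwidth is interface width times per-pin data rate, divided by eight bits per byte. The figures below are assumptions based on publicly known nominal specifications (a 1024-bit HBM3 stack at 6.4 Gb/s per pin, and a 64-bit DDR5-6400 module). They do not come from the article, and sustained real-world bandwidth is lower than these peaks.

```python
# Peak interface bandwidth = width (bits) * per-pin rate (Gb/s) / 8 bits per byte.
# Widths and rates are assumed nominal spec values, not figures from the article.

def peak_bandwidth_gb_s(width_bits: int, gbps_per_pin: float) -> float:
    """Return theoretical peak bandwidth in GB/s for one memory interface."""
    return width_bits * gbps_per_pin / 8.0


if __name__ == "__main__":
    hbm3_stack = peak_bandwidth_gb_s(width_bits=1024, gbps_per_pin=6.4)
    ddr5_module = peak_bandwidth_gb_s(width_bits=64, gbps_per_pin=6.4)
    print(f"HBM3 stack (assumed):       {hbm3_stack:.1f} GB/s")
    print(f"DDR5-6400 module (assumed): {ddr5_module:.1f} GB/s")
    print(f"Ratio: {hbm3_stack / ddr5_module:.0f}x")
```

At the same per-pin rate, the wider interface alone gives a sixteenfold difference per device in this sketch. That width is practical only because the stack sits next to the processor inside an advanced package.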

As a result, demand for HBM is experiencing explosive growth. HBM has moved from a niche technology to a core enabler of AI performance, driving not only higher memory content per accelerator but also reshaping capacity allocation across the entire DRAM industry. In the AI era, memory bandwidth is no longer a supporting feature—it is a strategic constraint, and HBM sits at the center of the industry’s effort to break through it.

From Cyclical Recovery to Structural Constraint: Positioning for Durable Value in the AI Era

In conclusion, the semiconductor and memory industries are no longer operating under traditional cyclical rules. AI has fundamentally altered the demand structure, shifting value creation toward bandwidth, advanced packaging, and system-level optimization. Memory is no longer a passive component in computing architectures—it has become a strategic constraint that defines performance ceilings. HBM, AI ASICs, and server-centric DRAM demand illustrate how supply limitations, rather than excess capacity, now shape pricing power and profitability.

While overall semiconductor growth may moderate as macro conditions normalize, AI-driven infrastructure investment continues to anchor long-term expansion. The key for investors and industry participants is no longer timing the cycle, but identifying structural positioning: who controls bandwidth, who enables compute efficiency, and who sits closest to the AI bottleneck.

The next phase of growth will be more selective, more capital-intensive, and more architecture-driven. In this environment, durable advantage will belong to companies aligned with AI’s core constraints—not merely its surface-level growth.