Nvidia GTC AI Conference Preview: GB300, CPO switches, and NVL288

Original Article by SemiVision Research

The GTC Conference is set to return to San Jose from March 17 to 21, 2025, bringing together thousands of developers, innovation leaders, and business executives. Attendees will explore how AI and accelerated computing are helping humanity tackle its most complex challenges.

NVIDIA CEO Jensen Huang's GTC Keynote

NVIDIA CEO Jensen Huang will deliver a keynote at GTC, addressing key topics such as:

AI agents

Robotics technology

The future of accelerated computing

His presentation is expected to provide insights into the evolution of AI, robotics, and next-generation computing advancements.

As speculation grows about what NVIDIA will unveil at the 2025 GTC, expectations are high for the announcement of GB300, CPO switches, and NVL288.

GB300 Overview

The B300 GPU is expected to deliver 50% more FLOPS than its predecessor, the B200. Key features of the GB300 platform include:

CX8 (ConnectX-8) NIC with 2× the network bandwidth

CPO-version switch

GPU SXM module

Full liquid cooling, featuring enhanced cold plate modules and NVQD

Increased power shelf capacity from 66kW to 90kW
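As a rough sanity check on the two headline numbers above, the sketch below computes the relative increase in power shelf capacity and places it beside the expected 50% FLOPS gain. The helper name `pct_increase` is our own. Shelf capacity is a provisioning ceiling rather than measured power draw, so this comparison says nothing definitive about efficiency.

```python
def pct_increase(old: float, new: float) -> float:
    """Relative increase from `old` to `new`, in percent."""
    return (new - old) / old * 100.0


# Figures quoted in the GB300 list above (expectations, not measurements).
POWER_SHELF_KW_OLD = 66.0
POWER_SHELF_KW_NEW = 90.0
FLOPS_GAIN_PCT = 50.0  # expected B300 gain over B200

shelf_gain_pct = pct_increase(POWER_SHELF_KW_OLD, POWER_SHELF_KW_NEW)

if __name__ == "__main__":
    print(f"Power shelf capacity: +{shelf_gain_pct:.1f}%")  # +36.4%
    print(f"Expected FLOPS:       +{FLOPS_GAIN_PCT:.1f}%")  # +50.0%
```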

NVIDIA’s Chip Development Roadmap

NVIDIA’s chip architecture is evolving along three major platforms:

Hopper Platform (Current)

Blackwell Platform (Current)

Future Rubin Platform (Upcoming)

Development follows an annual cadence: a new architecture arrives each year, shaped by data center scalability requirements and technical constraints.

NVIDIA's Rubin series marks a significant shift, as most of its chip designs will transition to the 3nm process. This move aligns with next-generation AI and HPC demands, improving power efficiency, performance, and scalability.

Key Takeaways:

Most Rubin chips will adopt 3nm process technology

Higher transistor density for improved AI workload efficiency

Better power efficiency, crucial for large-scale data centers

Alignment with NVIDIA’s roadmap for annual architectural upgrades

According to SemiVision’s analysis, the following chart summarizes NVIDIA’s Rubin chip design transition to 3nm.

We have compiled this information into a table for easier visualization.

Nvidia Chip Design Roadmap

Scale-Out and the Importance of CPO Technology in AI Clusters

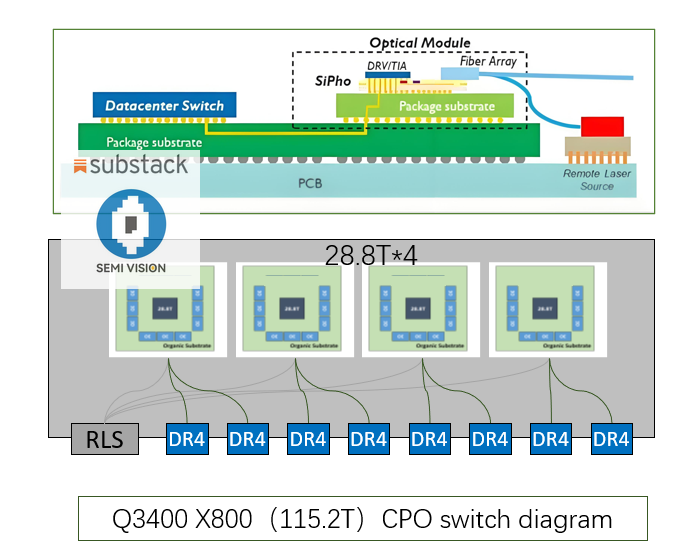

As AI clusters continue to expand, scale-out networking becomes critical to overcoming bandwidth limitations, and pushing data transfer efficiency further requires new interconnect technology. This is where silicon photonics comes into play, driving discussion of Co-Packaged Optics (CPO).

What is CPO Technology?

CPO aims to achieve electro-optical integration through advanced packaging: optical components are integrated directly within the switch package instead of in pluggable transceivers at the front panel. Shortening the electrical path between the switch chip and the optics significantly reduces power consumption and latency and relaxes bandwidth constraints.

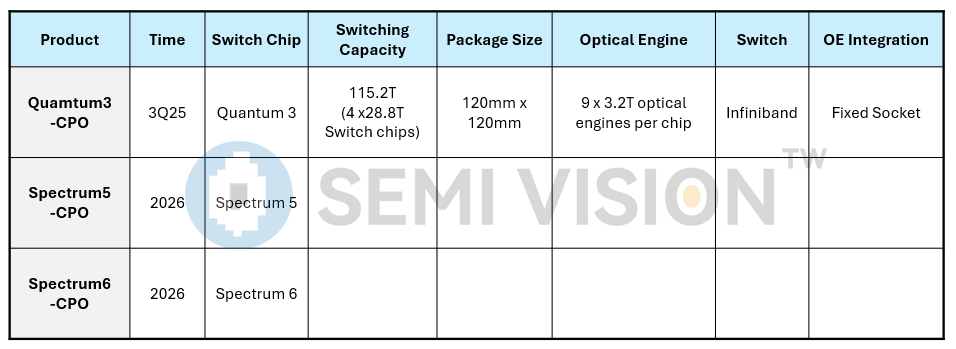

Nvidia's CPO Product Lineup

Nvidia's CPO product portfolio includes InfiniBand (Quantum) and Ethernet (Spectrum) switches, with the following key models:

Quantum 3400 X800 InfiniBand Switch

Spectrum 5 X800 Ethernet Switch

Spectrum 6 X800 Ethernet Switch

Production Timeline

Quantum 3400 CPO switch → Scheduled for mass production in July 2025

Spectrum 5 CPO switch → Expected to enter mass production in December 2025

As CPO technology gains traction, it is expected to reshape AI infrastructure by enabling higher bandwidth, lower power consumption, and more scalable AI clusters.

Quantum 3400 CPO Switch Overview

Total Switching Capacity: 115.2T

Chiplet Design:

4× 28.8T switch chips

Each 28.8T switch chip consists of 6 chiplets

Each chiplet consists of 3 small dies

Each small die has a switching capacity of 1.6T

Per-chip capacity: 1.6T × 3 dies × 6 chiplets = 28.8T, so the four chips give 4 × 28.8T = 115.2T per switch
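The capacity figures above can be checked with a few lines of arithmetic. This is a minimal sketch using the article's stated numbers; the constant and function names are illustrative, and bandwidths are kept in integer Gb/s (1.6T = 1,600 Gb/s) to avoid floating-point rounding.

```python
# Quantum 3400 chiplet hierarchy, per the figures above (integer Gb/s).
DIE_GBPS = 1_600          # each small die: 1.6T
DIES_PER_CHIPLET = 3
CHIPLETS_PER_CHIP = 6
CHIPS_PER_SWITCH = 4


def chip_capacity_gbps() -> int:
    """Switching capacity of one chip: die rate x dies x chiplets."""
    return DIE_GBPS * DIES_PER_CHIPLET * CHIPLETS_PER_CHIP


def switch_capacity_gbps() -> int:
    """Total switching capacity of the four-chip switch."""
    return chip_capacity_gbps() * CHIPS_PER_SWITCH


if __name__ == "__main__":
    print(f"per chip:   {chip_capacity_gbps() / 1000:.1f}T")    # 28.8T
    print(f"per switch: {switch_capacity_gbps() / 1000:.1f}T")  # 115.2T
```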

Optical Engine Integration:

9× 3.2T optical engines surrounding each switch chip package (9 × 3.2T = 28.8T, matching the chip's switching capacity)

Each optical engine connects to fibers via a fiber array unit (FAU)

Each optical engine has 32 optical waveguide channels, each coupled with one fiber

Since each fiber currently carries 100G, a 3.2T optical engine requires 3.2T ÷ 100G = 32 optical waveguides
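The same arithmetic links the per-fiber rate, optical engine bandwidth, and fiber count. The sketch below assumes the article's figures (100G per fiber, one fiber per waveguide channel, nine 3.2T engines per chip, four chips per switch). The helper names are hypothetical, and the fiber total counts only these data channels.

```python
# Article's figures, in integer Gb/s.
FIBER_GBPS = 100          # current per-fiber rate
ENGINE_GBPS = 3_200       # one 3.2T optical engine
ENGINES_PER_CHIP = 9
CHIP_GBPS = 28_800        # one 28.8T switch chip
CHIPS_PER_SWITCH = 4


def fibers_per_engine(engine_gbps: int, fiber_gbps: int) -> int:
    """Waveguide channels (one fiber each) needed to carry an engine's bandwidth."""
    if engine_gbps % fiber_gbps:
        raise ValueError("engine bandwidth is not a whole number of fibers")
    return engine_gbps // fiber_gbps


def optical_gbps_per_chip() -> int:
    """Aggregate optical bandwidth of the engines around one chip."""
    return ENGINES_PER_CHIP * ENGINE_GBPS


def data_fibers_per_switch() -> int:
    """Data fibers for the whole switch, one per waveguide channel."""
    per_engine = fibers_per_engine(ENGINE_GBPS, FIBER_GBPS)
    return per_engine * ENGINES_PER_CHIP * CHIPS_PER_SWITCH


if __name__ == "__main__":
    print(fibers_per_engine(ENGINE_GBPS, FIBER_GBPS))  # 32
    print(optical_gbps_per_chip() == CHIP_GBPS)        # True
    print(data_fibers_per_switch())                    # 1152
```

As a purely hypothetical comparison, if the per-fiber rate doubled to 200G, `fibers_per_engine(3_200, 200)` would return 16, halving the fiber count for the same engine bandwidth.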

PCB Architecture:

Each PCB holds 2× 28.8T switch chips

Two PCBs are stacked vertically within each switch (four chips in total), achieving a compact form factor

Spectrum 5 & Spectrum 6 CPO Switches

Nvidia’s next-generation Ethernet CPO switches follow a similar chiplet-based design:

Spectrum 5 CPO Switch

Total Switching Capacity: 204.8T

4× 51.2T switch chips

Optical Engine Setup: 16× 3.2T optical engines per chip

Spectrum 6 CPO Switch

Total Switching Capacity: 409.6T

4× 102.4T switch chips

Optical Engine Setup: 16× 6.4T optical engines per chip
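All three CPO switches follow the same pattern. Optical engines per chip × engine bandwidth should equal chip capacity, and chips × chip capacity should equal the switch total. The following is a minimal consistency check over the article's figures. The `CpoSwitch` class is our own illustrative model, not an Nvidia API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpoSwitch:
    """Illustrative model of a multi-chip CPO switch (bandwidths in Gb/s)."""
    name: str
    chips: int
    chip_gbps: int
    engines_per_chip: int
    engine_gbps: int

    @property
    def total_gbps(self) -> int:
        return self.chips * self.chip_gbps

    def optics_match_chip(self) -> bool:
        """True if the engines around one chip carry exactly its capacity."""
        return self.engines_per_chip * self.engine_gbps == self.chip_gbps


SWITCHES = [
    CpoSwitch("Quantum 3400", 4, 28_800, 9, 3_200),
    CpoSwitch("Spectrum 5", 4, 51_200, 16, 3_200),
    CpoSwitch("Spectrum 6", 4, 102_400, 16, 6_400),
]

if __name__ == "__main__":
    for sw in SWITCHES:
        print(f"{sw.name}: {sw.total_gbps / 1000:.1f}T total, "
              f"optics match chip: {sw.optics_match_chip()}")
```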

Packaging Technology

Both Spectrum 5 and Spectrum 6 CPO switches adopt CoWoS-S packaging

Why Is the Package Size Larger for the InfiniBand Switch Than for the Ethernet Switch?

The key reason the Quantum 3400 InfiniBand switch has a larger package than the Spectrum 5/6 Ethernet switches lies in how optical engine integration has evolved: the Quantum 3400 uses a fixed optical engine design, whereas Spectrum 5/6 move to pluggable optical engines.

Pluggable optical engines reduce repair complexity

Lower maintenance costs and downtime for Spectrum 5/6 switches

Quantum 3400's fixed design was a transitional solution before the pluggable upgrade

As optical interconnect technology matures, hot-swappable optical engines will likely become the standard in future high-performance networking hardware.

Nvidia's CPO Product Lineup

Beyond CPO switches, we expect OIO (Optical I/O) to begin deployment in 2027, aligning with TSMC’s CPO roadmap.

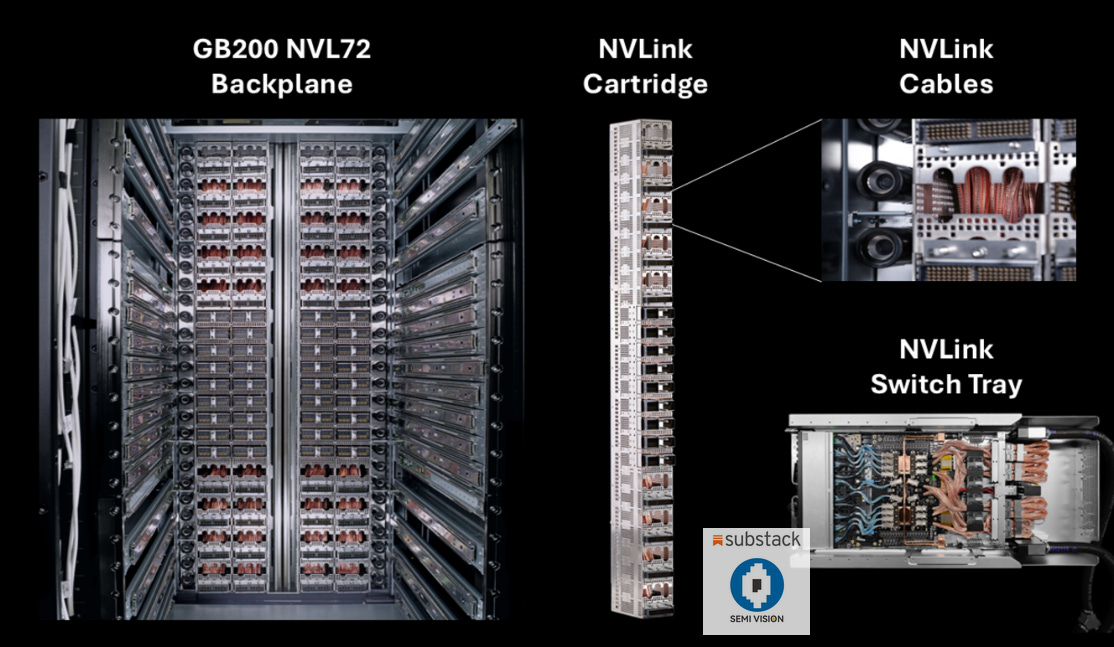

NVL288: In-Rack Connectivity

With the surging demand for inference workloads, we anticipate that Nvidia will introduce additional rack configurations beyond the current NVL72, aiming to enhance performance and spatial efficiency.

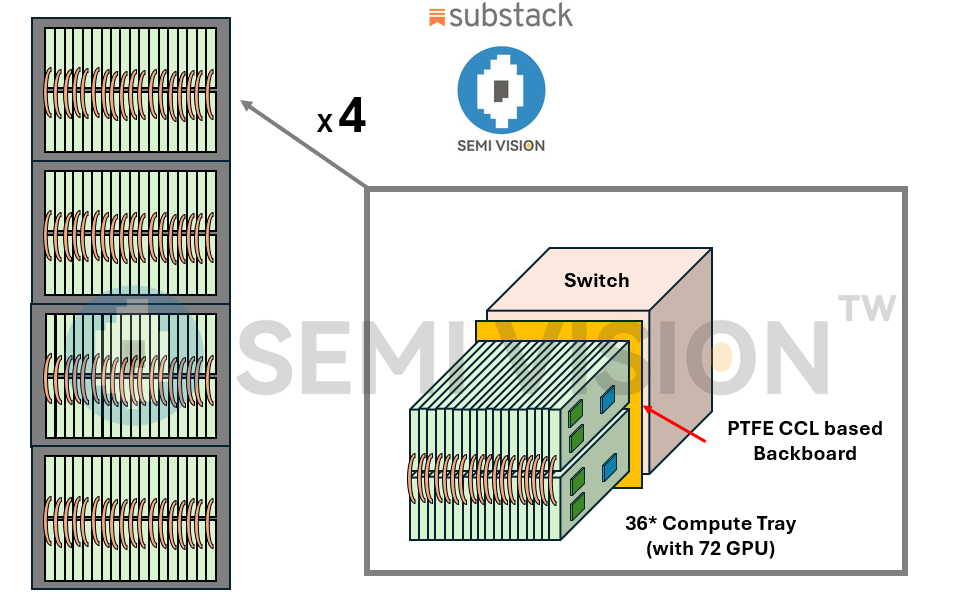

We expect the NVL288 configuration to debut alongside the next-generation Rubin architecture, with four NVL72 units interconnected via rear-side cables to form an NVL288 rack.

Each NVL72 contains 36 compute trays (equipped with I/O, NVLink interfaces, 1 CPU, and 2 GPUs).

These compute trays are orthogonally interconnected with NVSwitch trays.

Each NVL72 includes 6 NVSwitch trays, ensuring seamless in-rack connectivity.
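Under the configuration described above (36 compute trays with 1 CPU and 2 GPUs each, plus 6 NVSwitch trays per NVL72), the NVL288 totals follow by multiplying by four. The sketch below is a hypothetical model of these expected figures, not a confirmed Nvidia specification; the `Nvl72` class and `nvl288_totals` function are our own names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nvl72:
    """One NVL72 unit as expected in the article (hypothetical model)."""
    compute_trays: int = 36
    cpus_per_tray: int = 1
    gpus_per_tray: int = 2
    nvswitch_trays: int = 6

    @property
    def gpus(self) -> int:
        return self.compute_trays * self.gpus_per_tray

    @property
    def cpus(self) -> int:
        return self.compute_trays * self.cpus_per_tray


def nvl288_totals(unit: Nvl72 = Nvl72(), units: int = 4) -> dict[str, int]:
    """Aggregate counts when `units` NVL72 units are cabled together."""
    return {
        "gpus": unit.gpus * units,
        "cpus": unit.cpus * units,
        "compute_trays": unit.compute_trays * units,
        "nvswitch_trays": unit.nvswitch_trays * units,
    }


if __name__ == "__main__":
    print(nvl288_totals())
    # {'gpus': 288, 'cpus': 144, 'compute_trays': 144, 'nvswitch_trays': 24}
```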

PTFE CCL Backplane Solution

We anticipate that Nvidia will transition to a backplane built on PTFE (polytetrafluoroethylene) copper-clad laminate (CCL), replacing the traditional cable tray, which suffers from low yield and inefficient maintenance.

PTFE CCL offers:

Ultra-low dielectric loss, ensuring stable high-frequency performance

Minimized energy dissipation, improving overall system efficiency

We expect Nvidia to showcase NVL288 during the GTC conference, highlighting its advancements in rack-scale AI infrastructure.

In the next article, we will delve into Nvidia's CPO supply chain, covering its key suppliers, technology partners, and the manufacturing and packaging ecosystem of CPO.

Related SemiVision CPO Articles:

How to Distinguish Between CPO and OIO? What Is Their Fundamental Difference?

TSMC and NVIDIA Pioneering the Future of AI with Silicon Photonics Technology

The paid subscribers' area provides the full tables for:

Nvidia Chip Design Roadmap

Nvidia's CPO Product Lineup