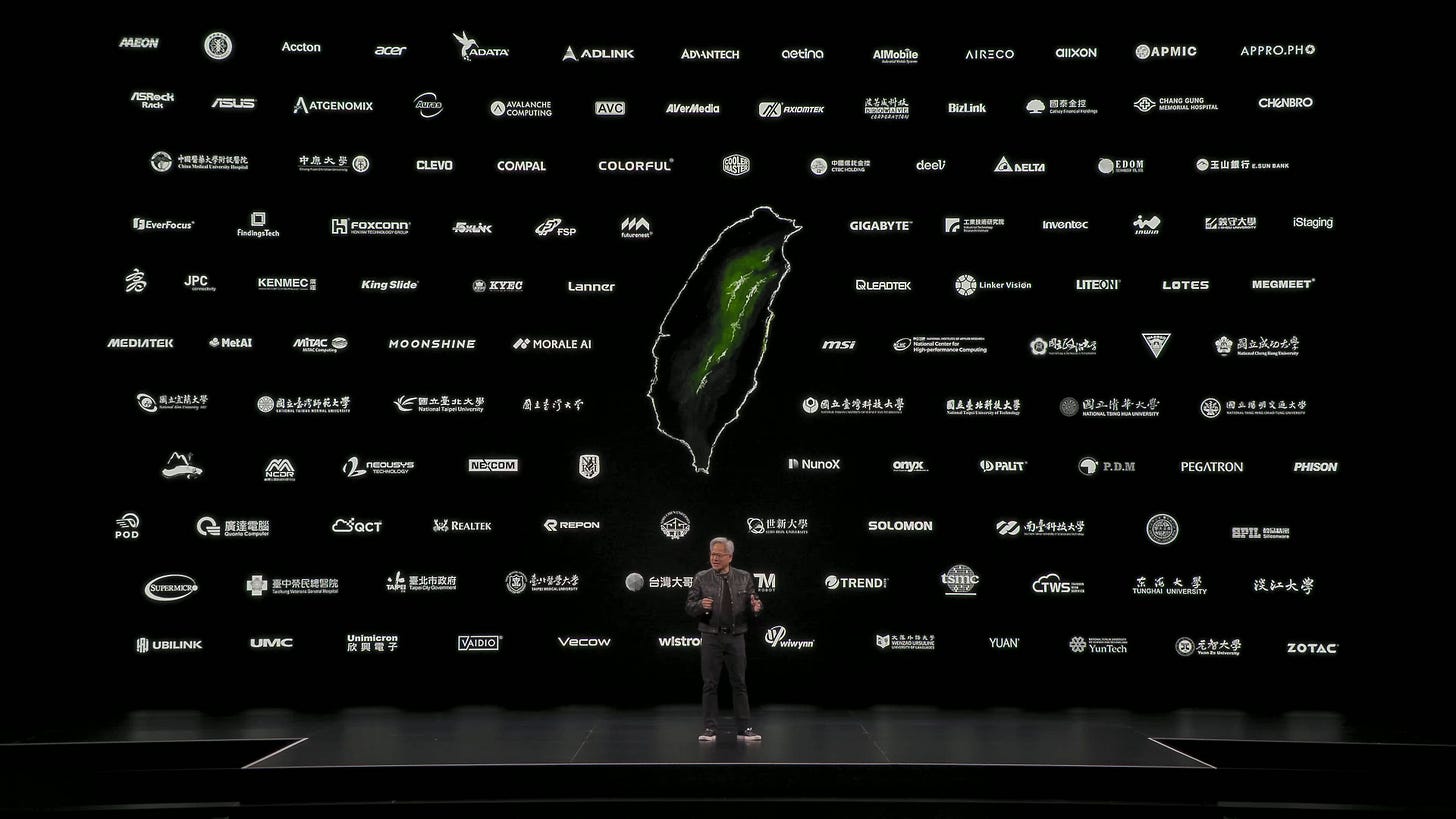

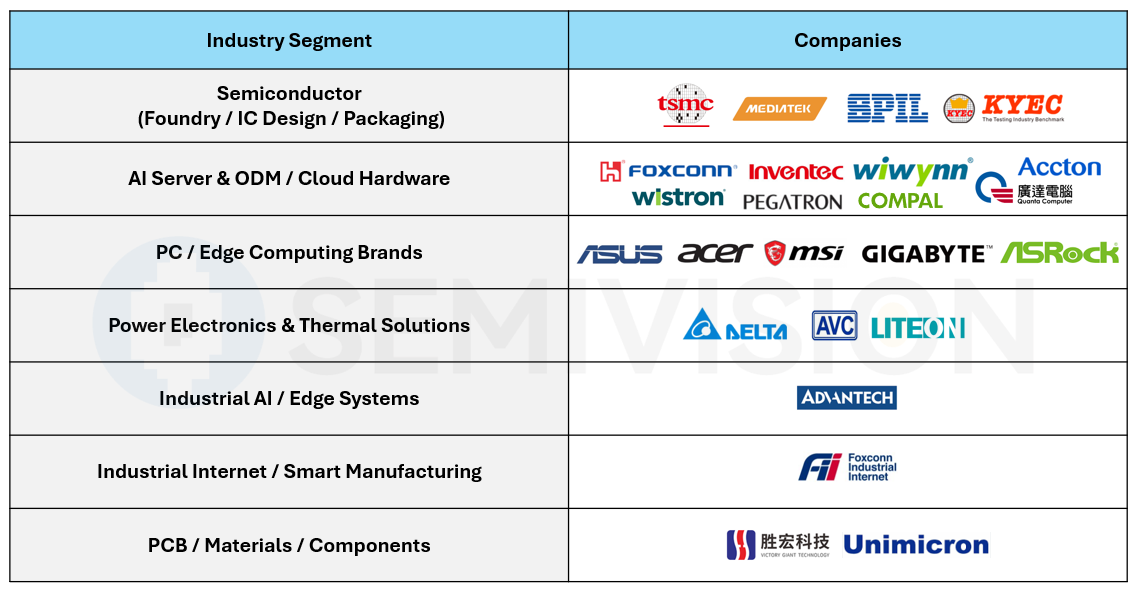

Inside the “Trillion-Dollar Dinner”: Who Sat at NVIDIA’s Taiwan Power Table?

Original article by SemiVision Research (NVIDIA, TSMC, MediaTek, SPIL, Foxconn, Wistron, Delta, AVC)

Today’s “Trillion-Dollar Dinner” continued the long-standing tradition of “same place, same core group.” It was once again held at Jensen Huang’s favorite restaurant, Brick Kiln Old-Style Taiwanese Cuisine, marking the fourth time he has hosted supply-chain partners at this venue.

The restaurant specializes in traditional Taiwanese “nostalgic” cuisine. Its exterior is built with red brick and roof tiles, while the interior displays vintage items such as Tatung Boy figurines, old HeySong soda metal signs, and classic Taiwanese toys, recreating the atmosphere of everyday life in Taiwan during the 1950s and 1960s.

For Huang, who was born in 1963, the setting and décor closely echo memories of his childhood growing up in Taiwan.

Food in Taiwan is remarkably affordable. For a group of 10 people, a full meal with 9 dishes, 1 soup, 1 dessert, and a serving of fruit typically costs only US$200–400 in total, or roughly US$20–40 per person, which makes it an excellent value for the quality and variety offered.

Who Dined with Jensen Huang Tonight?

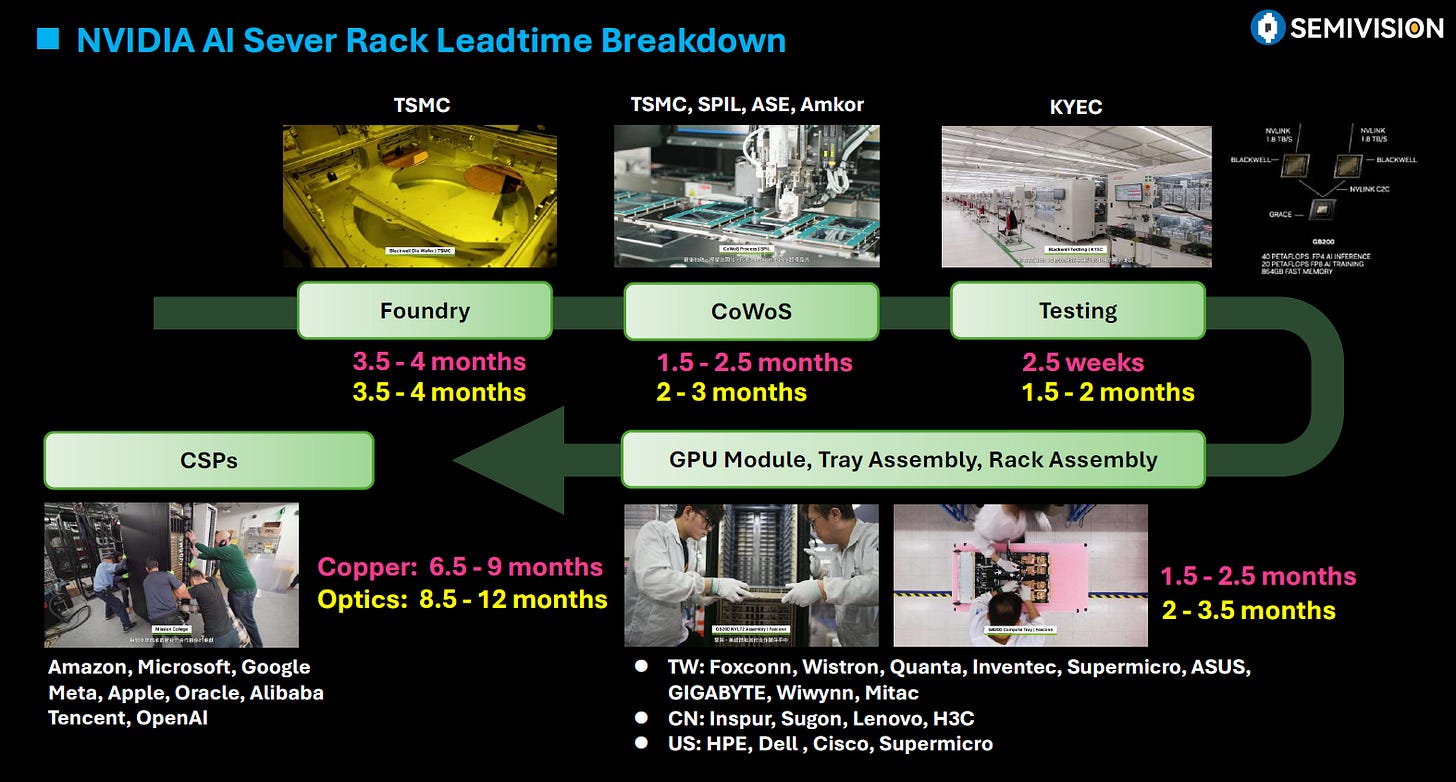

A Deep Look into NVIDIA’s Taiwan Supply Chain

On the third day of his Taiwan visit, Jensen Huang once again chose a place that feels like home: a nostalgic, old-style Taiwanese restaurant. But this gathering carried meaning far beyond a routine executive dinner. It was a symbolic convergence of the companies that quite literally build the physical foundation of the AI era.

Behind the familiar dishes, round tables, and warm, retro atmosphere sat the real engine of global AI infrastructure: NVIDIA’s Taiwan-centered supply chain. This was not a meeting about slides, strategy decks, or abstract roadmaps; it was a quiet acknowledgment that the AI revolution is ultimately constrained and enabled by manufacturing reality — wafers, substrates, advanced packaging, power systems, thermal solutions, and precision assembly.

To understand why this dinner matters, you don’t study the menu — you study the guest list, because each seat at the table represents a critical layer of the compute stack that transforms silicon designs into working AI supercomputers. In today’s environment, NVIDIA is no longer simply a chip designer; it operates as the gravitational core of a planet-scale computing ecosystem, orchestrating an industrial network that spans design, fabrication, packaging, system integration, and data center deployment.

Taiwan is where this digital ambition takes tangible form: leading-edge nodes are manufactured, CoWoS capacity is expanded, boards are assembled, racks are integrated, and AI clusters become deployable infrastructure. The dinner therefore reflects a deeper structural truth. AI leadership is no longer defined solely by algorithms or model size, but by who can coordinate the most complex, capital-intensive, and tightly synchronized hardware supply chain in the world, and in that equation Taiwan is not peripheral but central.

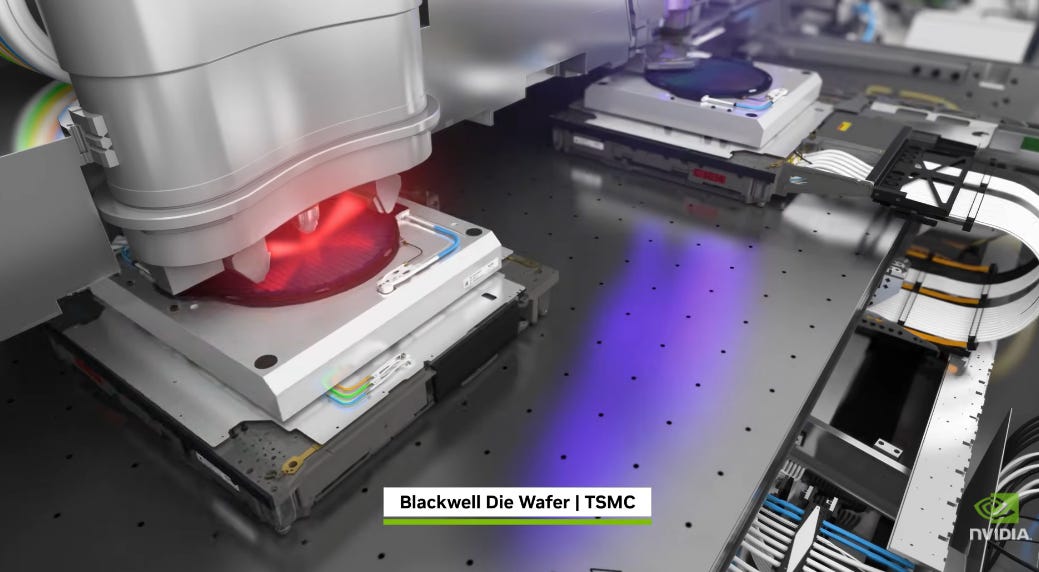

NVIDIA is fundamentally a design powerhouse, creating the computational brains of modern AI systems through GPUs such as the H100, H200, and the Blackwell generation. But chip design is only the opening chapter in the journey from silicon blueprint to working AI infrastructure.

Transforming those processors into complete AI servers, GPU trays, racks, cooling architectures, power distribution networks, and fully deployable data center modules demands a manufacturing ecosystem with extraordinary depth, coordination, and speed. That ecosystem must synchronize advanced packaging, board-level integration, mechanical engineering, thermal solutions, and large-scale system assembly under relentless time-to-market pressure. At this level of scale and execution, that ecosystem is Taiwan, the place where digital intelligence is translated into physical computing power.

SemiVision has compiled a list of the companies that attended tonight’s dinner.

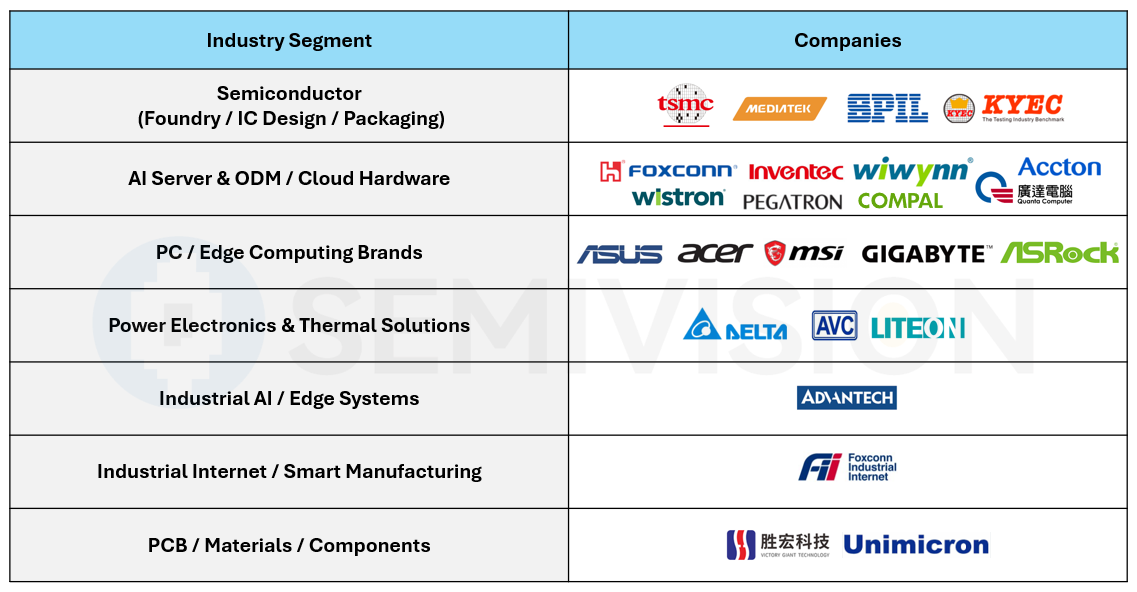

TSMC:

TSMC is the world’s most advanced pure-play semiconductor foundry and the manufacturing backbone of the modern AI era. The company produces leading-edge logic chips at nodes such as 5nm and 3nm, and is moving toward 2nm and beyond, enabling the performance and power efficiency required for AI accelerators, high-performance CPUs, and advanced ASICs.

TSMC is also a central player in advanced packaging, particularly CoWoS, which integrates GPUs with HBM memory and is critical for AI server architectures. Its manufacturing model allows fabless companies to focus on design while leveraging TSMC’s process technology, yield optimization, and massive scale. For AI, TSMC is not just a chip maker — it is a system enabler. The physical limits of transistor density, interconnect scaling, and chip-to-chip integration are all pushed forward inside its fabs.

As AI models grow, so does dependence on TSMC’s ability to expand advanced capacity, making it one of the most strategically important companies in the global technology supply chain.

MediaTek:

MediaTek is a leading fabless semiconductor company known for SoCs across mobile, connectivity, and increasingly AI-focused computing platforms. While historically associated with smartphones, MediaTek’s strength lies in system integration, IP reuse, and cost-efficient high-volume design — capabilities that are increasingly valuable in the AI inference and edge computing era.

The company integrates CPUs, GPUs, NPUs, connectivity modems, and multimedia engines into highly optimized chips. Its growing AI presence includes edge AI processors, automotive platforms, and custom ASIC collaborations. MediaTek’s experience in power-efficient design is especially relevant as AI moves beyond data centers into devices, industrial systems, and distributed edge nodes.

In the broader AI ecosystem, MediaTek represents the “system SoC” model, where heterogeneous compute blocks are tightly integrated to deliver performance per watt. As inference demand scales, MediaTek’s role could expand from consumer electronics into AI-optimized silicon platforms that balance performance, integration, and cost — a critical formula for mass adoption of AI hardware.

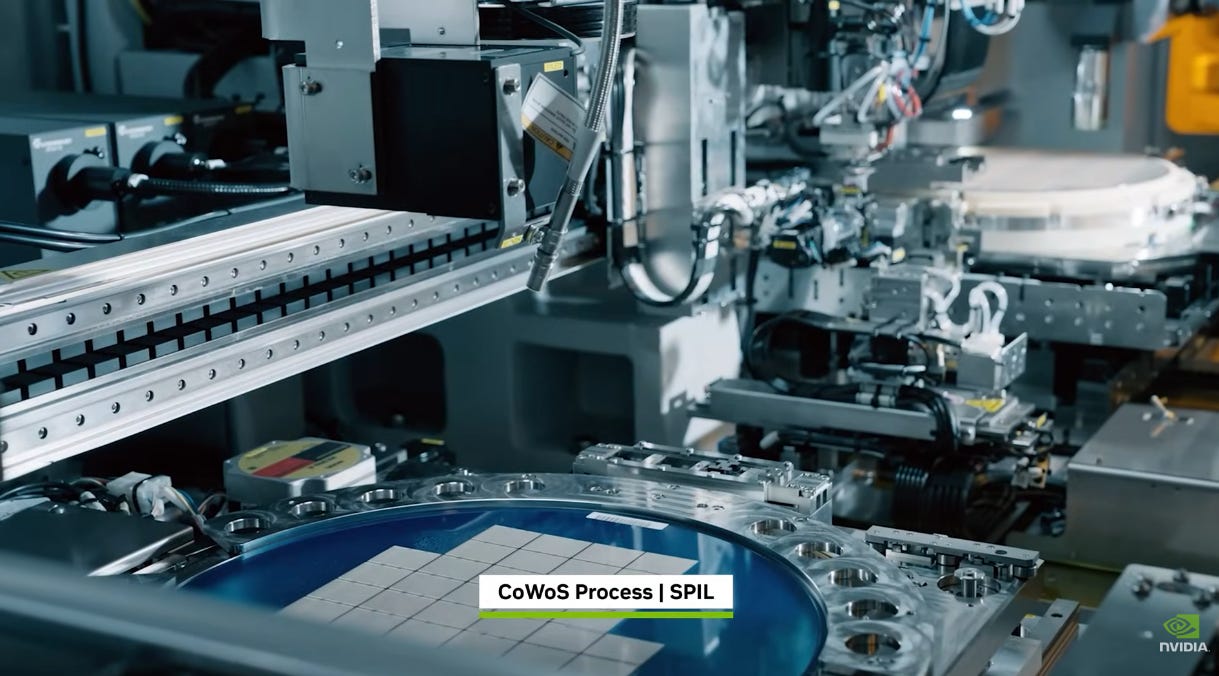

SPIL:

SPIL is a major semiconductor packaging and testing company and part of the advanced packaging backbone supporting modern AI chips. As chip complexity grows, packaging has shifted from a back-end process to a system-level engineering discipline.

SPIL provides flip-chip, wafer-level packaging, and advanced system-in-package solutions that enable high I/O density and signal integrity. In AI hardware, packaging determines thermal performance, power delivery, and interconnect bandwidth between logic dies and memory. SPIL’s expertise allows chip designers to move beyond monolithic die limits toward heterogeneous integration. This is particularly important as AI processors demand higher pin counts, faster interfaces, and tighter form-factor constraints.

By bridging the gap between wafer fabrication and system assembly, SPIL plays a crucial role in transforming silicon designs into reliable, high-performance components ready for server deployment.

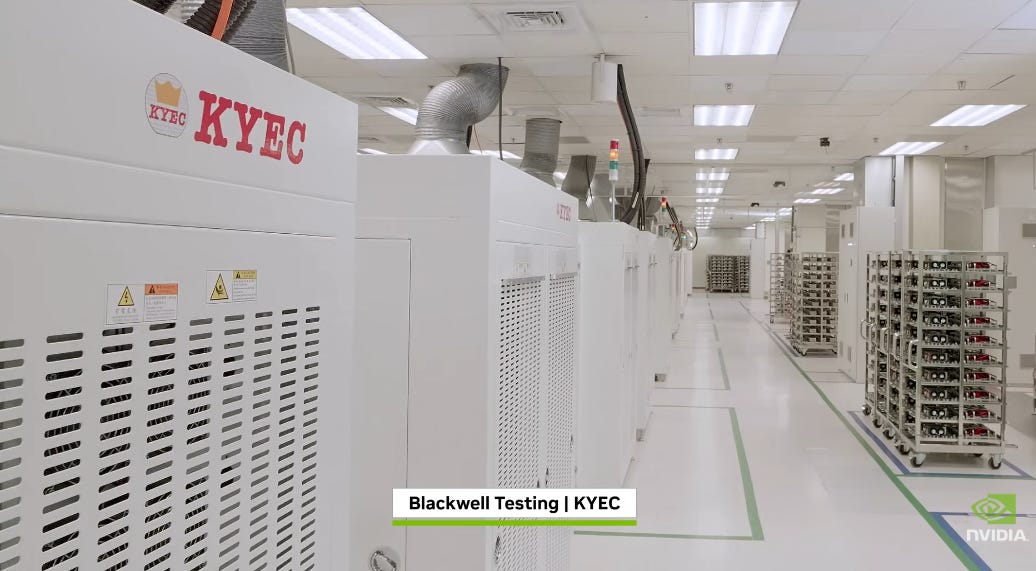

KYEC:

KYEC is a leading independent semiconductor testing and back-end service provider based in Taiwan, playing a crucial role in the global chip supply chain. The company specializes in wafer probing, final testing, burn-in, and reliability services for a wide range of semiconductor products, including logic devices, memory, mixed-signal ICs, and increasingly AI- and high-performance computing-related chips. As chip complexity and performance demands rise, advanced testing has become a strategic bottleneck, and KYEC’s capabilities help ensure yield, quality, and long-term reliability before devices enter system integration.

KYEC serves major fabless companies, IDMs, and foundries, acting as the quality gate between fabrication and system deployment. Its investments in high-parallel test platforms, advanced probe technologies, and data analytics allow efficient handling of large-volume, high-performance devices. In the AI era, where chips operate at higher power densities and tighter margins, precise electrical testing and screening are essential. KYEC’s role extends beyond traditional back-end services, becoming a key enabler of reliable, production-ready semiconductors that power servers, data centers, and intelligent systems worldwide.

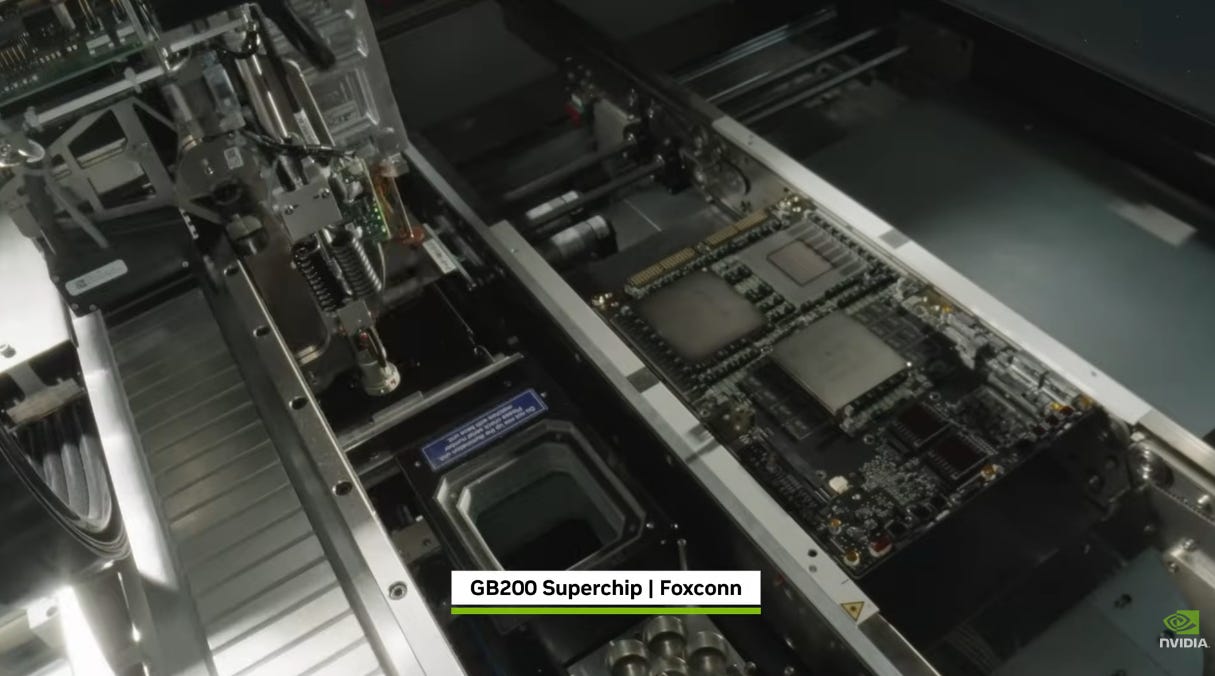

Foxconn:

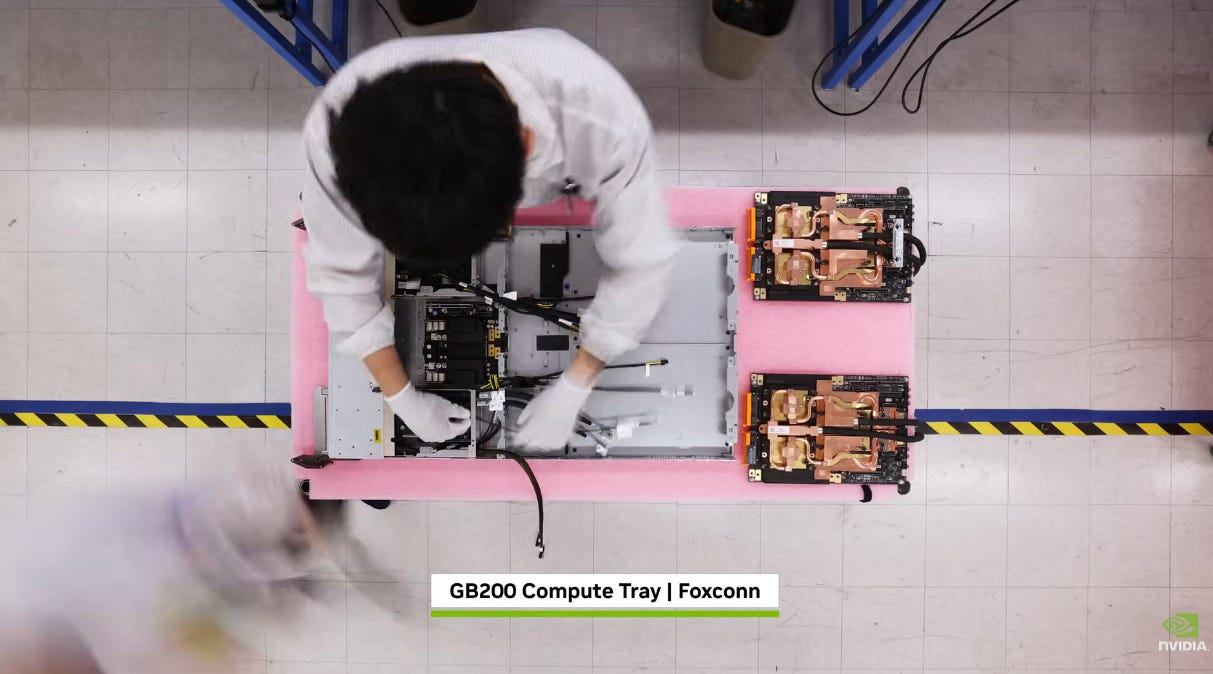

Foxconn is the world’s largest electronics manufacturing services company and a central integrator of AI server hardware. Beyond consumer electronics, Foxconn builds GPU servers, racks, and data center systems for hyperscalers. Its scale allows rapid deployment of complex AI infrastructure. The company integrates boards, mechanicals, power systems, and thermal modules into production-ready platforms.

As AI clusters grow in size and density, Foxconn’s manufacturing engineering, supply chain control, and automation capabilities become increasingly strategic. It effectively converts semiconductor innovation into deployable computing infrastructure.

Wiwynn:

Wiwynn focuses on cloud and hyperscale data center hardware, including AI-optimized servers. The company emphasizes modular design and open hardware architectures. It supports high-density GPU systems and advanced cooling technologies. Wiwynn’s engineering strength lies in large-scale system deployment, ensuring servers operate reliably in demanding data center environments.

As AI infrastructure scales, Wiwynn helps cloud providers translate silicon performance into operational computing capacity.

Wistron:

Wistron is a diversified ODM with a strong presence in servers and cloud hardware. It manufactures AI servers, storage systems, and networking devices. Wistron’s value lies in production scale, global logistics, and system integration. It bridges semiconductor supply with final system deployment.

As AI clusters expand, Wistron’s role in board assembly, mechanical integration, and system validation becomes increasingly vital to the AI compute ecosystem.

Pegatron:

Pegatron provides ODM services across computing and server hardware. It manufactures AI servers and data center components, focusing on reliability and cost optimization. Pegatron’s engineering integrates complex electronics with mechanical and thermal systems.

As hyperscale AI infrastructure grows, Pegatron contributes to transforming advanced chips into scalable, production-ready server platforms.

Compal:

Compal is an ODM serving computing, servers, and smart devices. It builds AI-ready server platforms and integrates subsystems such as power modules and cooling. Compal’s strength lies in high-volume production and supply chain management. In AI infrastructure, Compal helps scale hardware deployment from prototype to mass production.

Quanta:

Quanta is one of the world’s most important cloud server ODMs and a core manufacturer behind hyperscale data center infrastructure. The company builds GPU servers, AI racks, and large-scale computing platforms used by major global cloud providers. Quanta’s strengths lie in system architecture, mechanical engineering, and rack-level integration, where performance, power delivery, and thermal design must work in tight coordination.

As AI clusters grow larger and denser, server design becomes a systems engineering challenge rather than simple assembly, and Quanta operates at this intersection of compute, power, and mechanical scalability. Its manufacturing scale and supply chain coordination allow rapid deployment of advanced AI hardware. In the AI era, Quanta’s role is to transform cutting-edge silicon into operational computing infrastructure, ensuring that GPUs and accelerators move from chip form into fully integrated, data-center-ready AI systems.

ASUS:

ASUS is a global computing hardware brand known for motherboards, graphics cards, and high-performance systems, and it is increasingly expanding into AI workstations and edge computing platforms. While hyperscale data centers dominate large-model training, much of AI development, testing, and deployment occurs outside cloud facilities. ASUS supports this layer of the ecosystem by providing powerful desktop workstations, AI-ready PCs, and edge devices optimized for professional workloads. Its engineering expertise in board design, system stability, and thermal performance allows high compute density in compact systems. ASUS bridges consumer hardware innovation with professional computing needs, enabling researchers, developers, and enterprises to run AI models locally.

As AI spreads from centralized clusters into distributed environments, ASUS plays a role in decentralizing AI compute, making advanced processing accessible across industries.

Acer:

Acer is a global PC and computing systems company evolving toward AI-enabled devices and enterprise platforms. As AI workloads increasingly extend beyond data centers into commercial, educational, and industrial environments, Acer integrates AI acceleration into workstations, edge computers, and intelligent devices. The company combines hardware engineering with broad distribution capabilities, allowing AI-capable systems to reach global markets at scale. Acer’s platforms support AI inference, analytics, and productivity applications across sectors. Its strength lies in translating high-performance computing technologies into accessible commercial products.

In the AI hardware ecosystem, Acer contributes to the democratization of AI compute, ensuring that intelligent processing is available not only to hyperscalers but also to enterprises, institutions, and edge deployments worldwide.

MSI:

MSI is a high-performance hardware manufacturer recognized for graphics cards, motherboards, and advanced computing systems. Its expertise in performance tuning, thermal engineering, and power optimization has positioned it well for AI-ready workstations and edge computing platforms. AI workloads demand sustained performance under heavy thermal loads, and MSI’s engineering in cooling design and system stability supports these requirements. The company provides platforms suited for AI development, simulation, and professional visualization. MSI operates at the intersection of enthusiast-grade performance and professional computing, enabling AI practitioners to run demanding workloads outside large data centers.

As AI applications expand across creative, engineering, and research domains, MSI helps deliver compact yet powerful systems capable of supporting compute-intensive tasks.

Gigabyte:

Gigabyte manufactures motherboards, server systems, and AI-focused hardware platforms. It plays a dual role in both consumer high-performance computing and enterprise server infrastructure. The company develops GPU servers, AI edge systems, and high-density platforms designed for compute-intensive workloads. Gigabyte’s engineering focuses on signal integrity, power distribution, and system reliability — all essential for AI hardware where bandwidth and stability are critical. By bridging motherboard-level innovation with full server system integration, Gigabyte supports scalable AI deployment from edge nodes to data centers. Its ability to deliver both components and complete systems allows it to address multiple layers of the AI compute stack.

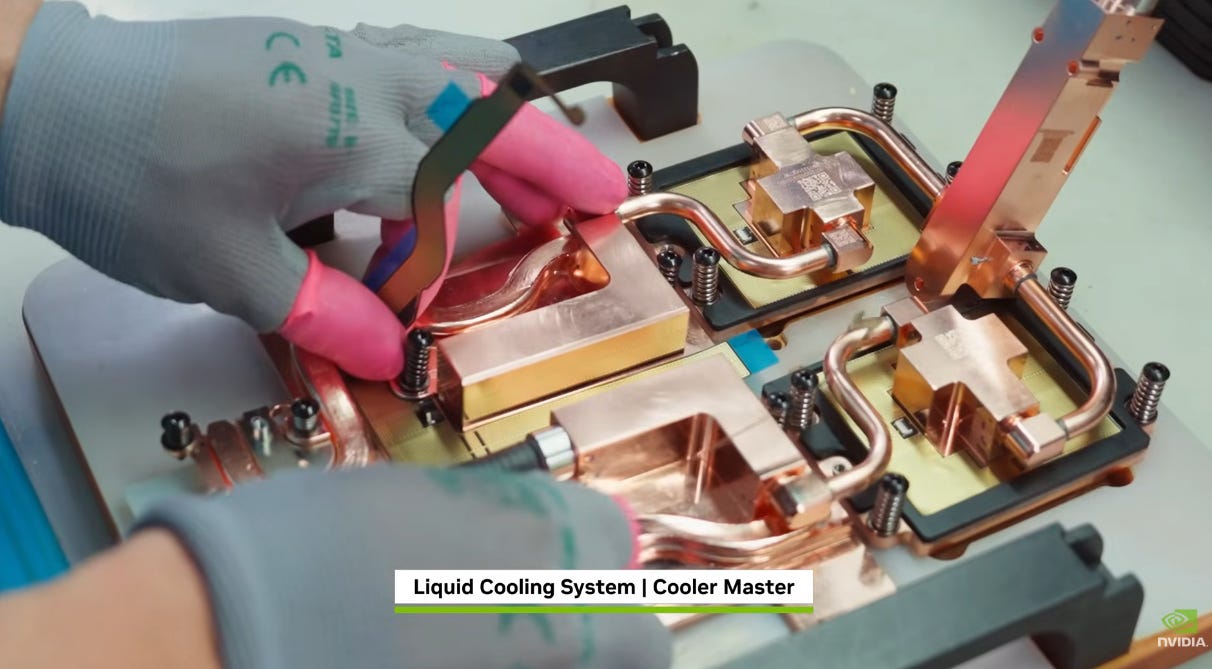

Delta Electronics:

Delta Electronics is a global leader in power supplies and thermal management solutions, both of which are foundational to AI data center operations. AI servers and GPU clusters consume massive amounts of electricity and generate significant heat, making efficient power conversion and cooling critical. Delta develops high-efficiency power systems, data center cooling technologies, and energy management solutions that improve reliability and sustainability. Its expertise enables stable power delivery to dense compute racks while reducing energy loss. As AI infrastructure scales, power efficiency becomes as important as processing performance, positioning Delta as a key enabler of AI data center expansion.

AVC:

AVC specializes in thermal solutions, including cooling fans, heat sinks, and advanced airflow modules. AI servers operate under extreme thermal conditions due to high power densities, making cooling a central design constraint. AVC’s products manage airflow and dissipate heat, supporting system stability and component longevity.

In modern AI hardware, thermal engineering directly impacts performance, reliability, and energy efficiency. AVC provides the physical cooling infrastructure that allows processors and memory to operate at peak levels without thermal throttling. Its role may be less visible than chip design, but it is essential to sustaining AI system performance.

Foxconn Industrial Internet:

Foxconn Industrial Internet (FII) focuses on smart manufacturing and industrial internet platforms. It integrates AI, automation, and data analytics into factory environments, transforming production into data-driven systems. By linking manufacturing operations with cloud intelligence, FII enables predictive maintenance, process optimization, and real-time monitoring. Its role in the AI ecosystem lies in digitizing physical production, making manufacturing more adaptive and efficient through intelligent systems.

Advantech:

Advantech is a leader in industrial computing and edge AI systems. It develops rugged, application-specific platforms used in manufacturing, healthcare, transportation, and smart cities. While large AI models train in data centers, inference increasingly happens at the edge, closer to real-world environments. Advantech enables this shift by embedding AI capabilities into industrial-grade hardware. Its systems integrate sensors, compute modules, and connectivity, turning AI from a cloud technology into an operational tool in physical industries. Advantech plays a key role in extending AI beyond digital infrastructure into real-world automation and intelligence.

LITEON:

Lite-On produces power supplies, optical components, and electronic modules used in servers and data center systems. Its power conversion technologies support the reliable operation of AI hardware, where stable voltage delivery is crucial. Lite-On focuses on efficiency, reliability, and compact design, enabling high-density server configurations. As AI data centers grow in scale, the importance of power modules that can operate continuously under heavy loads increases. Lite-On contributes to the electrical backbone of AI infrastructure, ensuring that advanced computing systems receive consistent and efficient power.

Victory Giant Technology:

Victory Giant Technology is a major PCB manufacturer producing high-layer-count, high-precision circuit boards. AI servers require complex PCBs capable of handling high-speed signals, dense routing, and strong power integrity. Victory Giant supplies these foundational components that allow advanced chips, memory, and networking devices to connect reliably. In AI hardware, PCB quality directly affects signal performance and system stability. Victory Giant forms part of the physical substrate layer that supports high-speed computing systems.

Victory Giant Technology, as a technology leader in the PCB industry, has established forward-looking capabilities that enable the manufacturing of ultra-high-layer-count PCBs exceeding 100 layers, with mass production capacity for boards above 70 layers. The company is among the first globally to achieve large-scale production of 6-stage 24-layer HDI products, and possesses advanced technical capabilities in 8-stage 28-layer HDI and 16-layer Any-layer HDI structures. Its technology platform also supports next-generation high-speed communication standards, including PCIe 6.0 and 1.6T optical modules. Products used in server platforms such as Eagle, Birch Stream, and Turin have already entered volume production, while next-generation platforms including Oak Stream and Venice have progressed into the testing phase.

In computing power and AI servers, Victory Giant Technology has built on its large-scale mass production of 6-stage, 24-layer HDI boards and has already initiated R&D and qualification for 10-stage, 30-layer HDI technology, with line width/spacing advanced to 40/40 μm. Its technical reserves for products exceeding 100 layers also significantly surpass the industry average. In materials innovation, Victory Giant has completed electrical and thermal performance validation of M7- and M8-grade materials in production applications, and is progressing with M9 material qualification to support 224 Gbps high-speed transmission. These developments position the company to support next-generation upgrades in AI servers and networking switches.

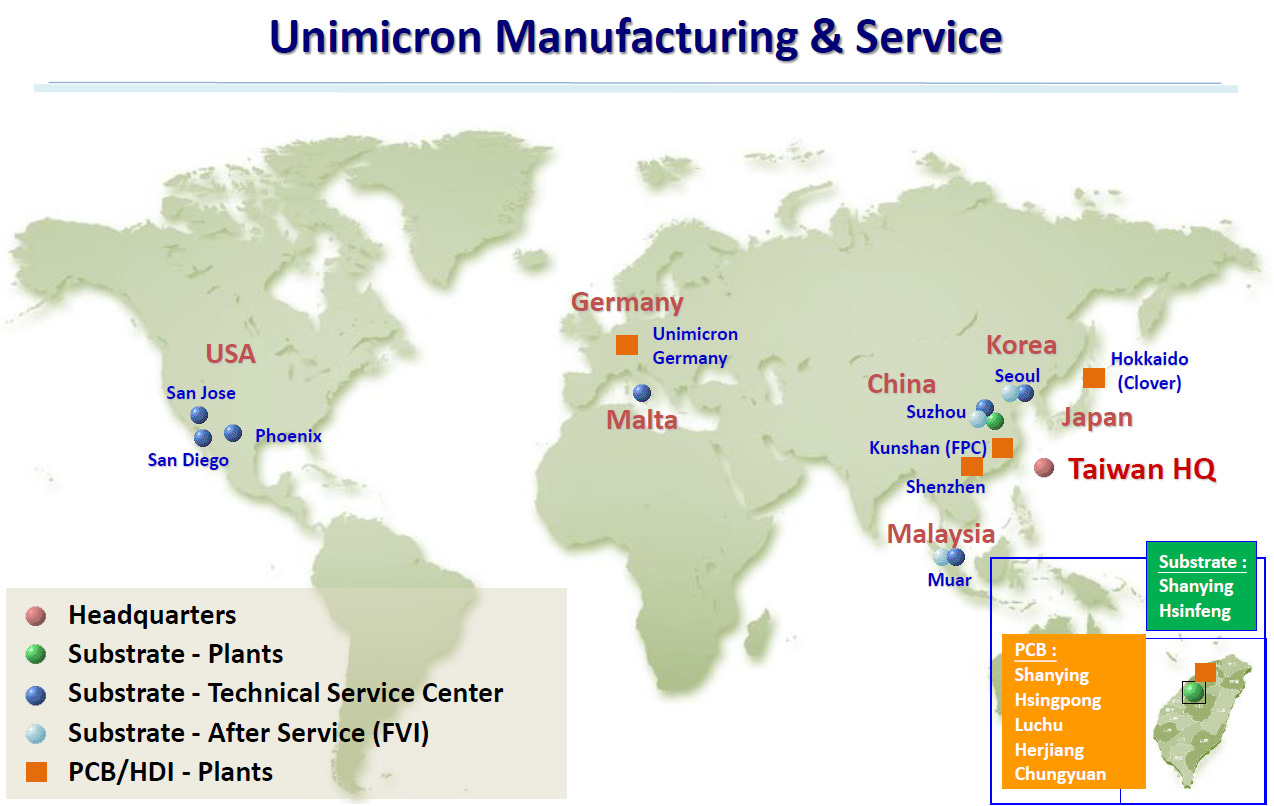

Unimicron:

Unimicron is a leading global manufacturer of advanced printed circuit boards (PCBs) and IC substrates, and a critical supplier in the semiconductor and AI hardware ecosystem. The company provides high-density interconnect (HDI) boards, ABF substrates, and high-layer-count PCBs used in servers, networking equipment, consumer electronics, and high-performance computing platforms. As AI systems demand faster data transmission and higher power delivery, Unimicron’s substrate technologies enable signal integrity, fine-line routing, and reliable electrical performance for advanced processors and high-speed interfaces.

Unimicron plays a key role in bridging semiconductor chips with full system hardware, as substrates and PCBs form the structural and electrical foundation of modern computing devices. Its expertise in materials, multilayer stacking, and precision manufacturing supports next-generation standards such as PCIe, high-speed memory interfaces, and advanced packaging integration. With strong relationships across foundries, OSATs, and system integrators, Unimicron helps ensure that leading-edge silicon can be deployed into scalable AI servers and data center infrastructure, making it an essential player in the global electronics supply chain.

This Dinner Was a Systems Meeting, Not a Social Event

When Jensen Huang gathers his Taiwan partners, he is not hosting a courtesy banquet but coordinating the physical engine of the AI era. Around that table, the discussion implicitly connects the silicon roadmap, advanced packaging capacity, server production ramps, and the evolution of power delivery and cooling architectures, all the way through to global data center deployment.

Each layer of AI infrastructure depends on tight timing: chips must align with packaging availability, server designs must match power density trends, and cooling technologies must evolve alongside compute intensity. In this sense, the dinner functions like a real-time synchronization point for the hardware stack that underpins modern AI systems. It reflects the reality that AI progress is no longer driven by algorithms alone but by the orchestration of manufacturing, engineering, and infrastructure at scale. In effect, supply-chain coordination acts as the operating system of AI hardware, and gatherings like this one are where it gets updated.

Taiwan: The Only Place Where the Entire AI Hardware Stack Comes Together

Taiwan’s structural irreplaceability in AI comes down to ecosystem density rather than any single company or technology. Many regions can design advanced chips, and others operate massive cloud platforms, but few places integrate every physical layer required to turn AI ambition into working infrastructure. Taiwan combines all of them: leading-edge silicon manufacturing, where advanced logic nodes are produced at scale; world-class packaging capabilities, including CoWoS and a deep OSAT base that enable high-bandwidth chip integration; the largest concentration of AI server ODMs, which build GPU systems and racks for global hyperscalers; high-density data center power expertise, critical as AI clusters push electrical and efficiency limits; advanced thermal ecosystems covering liquid cooling, airflow engineering, and heat dissipation; and full system integration, from board assembly to rack-level and data-center buildout.

Each of these layers is complex on its own, but Taiwan’s advantage lies in their proximity and coordination within a single industrial network. This tight coupling shortens development cycles, accelerates ramp-up, and reduces integration risk. As AI systems grow more power-hungry, bandwidth-intensive, and mechanically complex, success depends less on isolated excellence and more on synchronized execution across the stack — a structure that few other regions can replicate at comparable scale, speed, and manufacturing maturity.

AI is no longer merely a technology cycle driven by faster chips or larger models; it has crossed the threshold into becoming foundational infrastructure, comparable to electricity grids or the global internet — a permanent layer underpinning economies, industries, and daily life. What powers this transition is not software alone but the massive physical stack required to deliver computation at planetary scale. That stack must be manufactured, assembled, powered, cooled, and integrated into operational systems, and this is where Taiwan plays an outsized role.

Across foundries, advanced packaging lines, server manufacturing floors, power electronics plants, and thermal engineering specialists, the island forms one of the world’s densest concentrations of AI hardware capability. The symbolic meaning behind Jensen Huang’s gathering with partners reflects this structural reality: NVIDIA may architect the computational engines of the future, but the transformation of those designs into functioning, large-scale infrastructure happens through Taiwan’s industrial ecosystem.

In the emerging AI era, innovation begins with silicon, but civilization-scale deployment is built through coordinated manufacturing — the future is designed in code and circuits, yet it becomes real through factories, supply chains, and systems engineering working in unison.